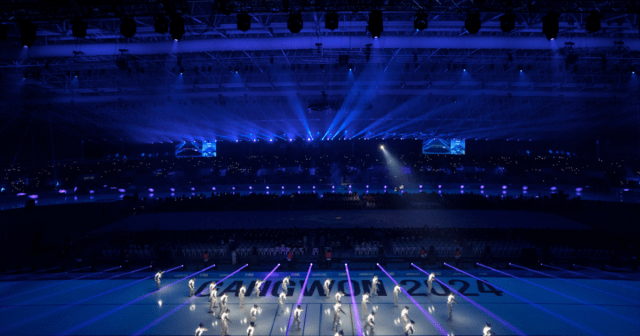

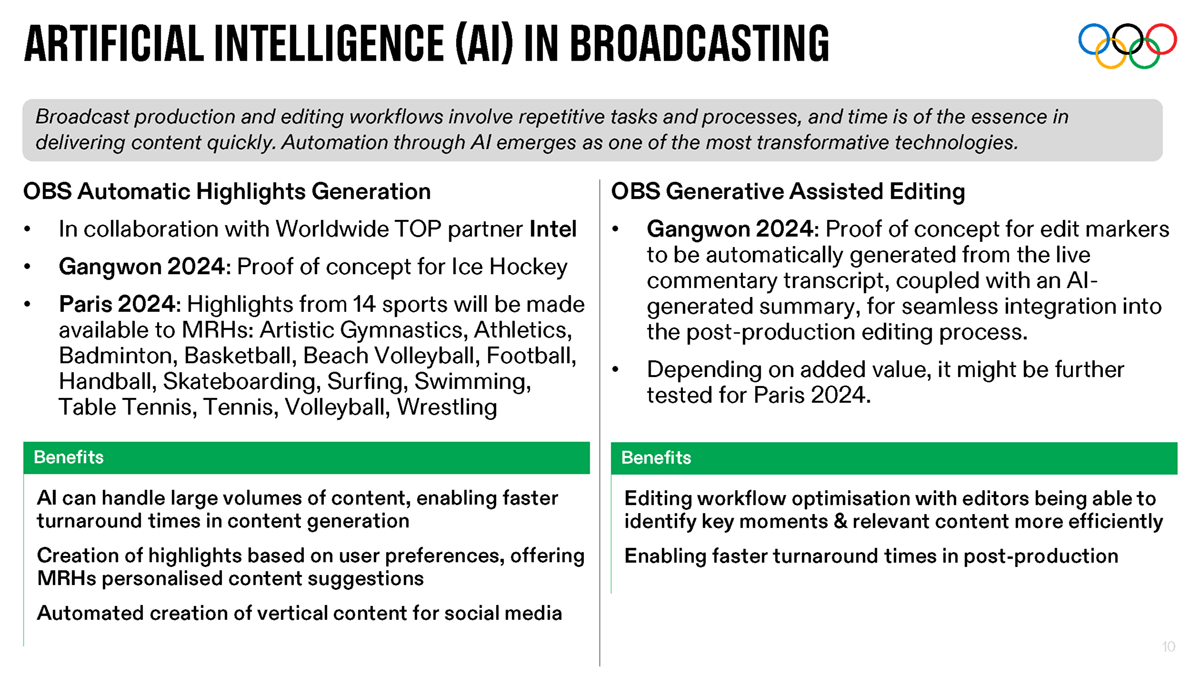

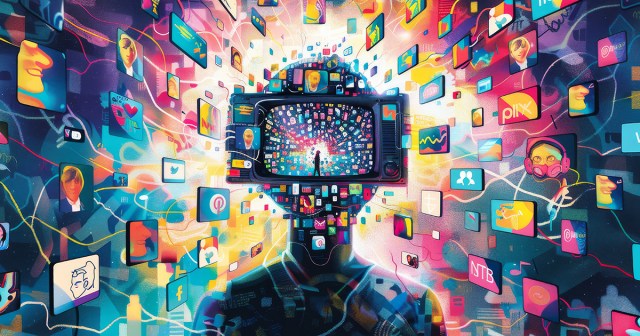

The 2024 Paris Olympics aren’t just a showcase of athletic excellence but also a testament to technological innovation. With AI at the forefront, the International Olympic Committee (IOC) and its partners are transforming how fans experience the Games. In Part 1 of NAB Amplify’s deep dive into the use of artificial intelligence at the 2024 Paris Games, “Transforming Olympic Broadcasting and Storytelling,” we examined how AI technology is revolutionizing live broadcast production at a mind-boggling scale. Here in Part 2, we explore how AI is enhancing fan interactions, improving accessibility, and creating immersive experiences both on-site and online.

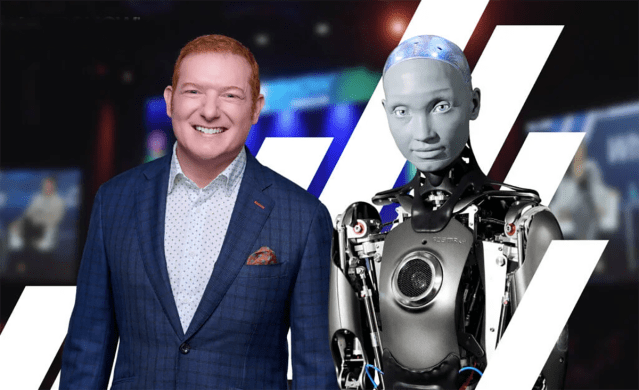

Key to this evolution are Intel, the Official AI Platform Partner for Paris 2024, and Google, the Official Search AI Partner of Team USA. Intel’s advanced AI technologies are powering various enhancements across the Games, including accessibility features, content management, and real-time data processing, while Google’s AI-powered features, integrated into NBCUniversal’s coverage, are elevating the fan experience.

These collaborations are setting new standards for engagement and interaction, ensuring that every fan, whether watching from home or attending in person, feels connected to the Games in a meaningful and personalized way.

READ MORE: Intel unveils AI-Platform Innovation for Paris 2024 (IOC)

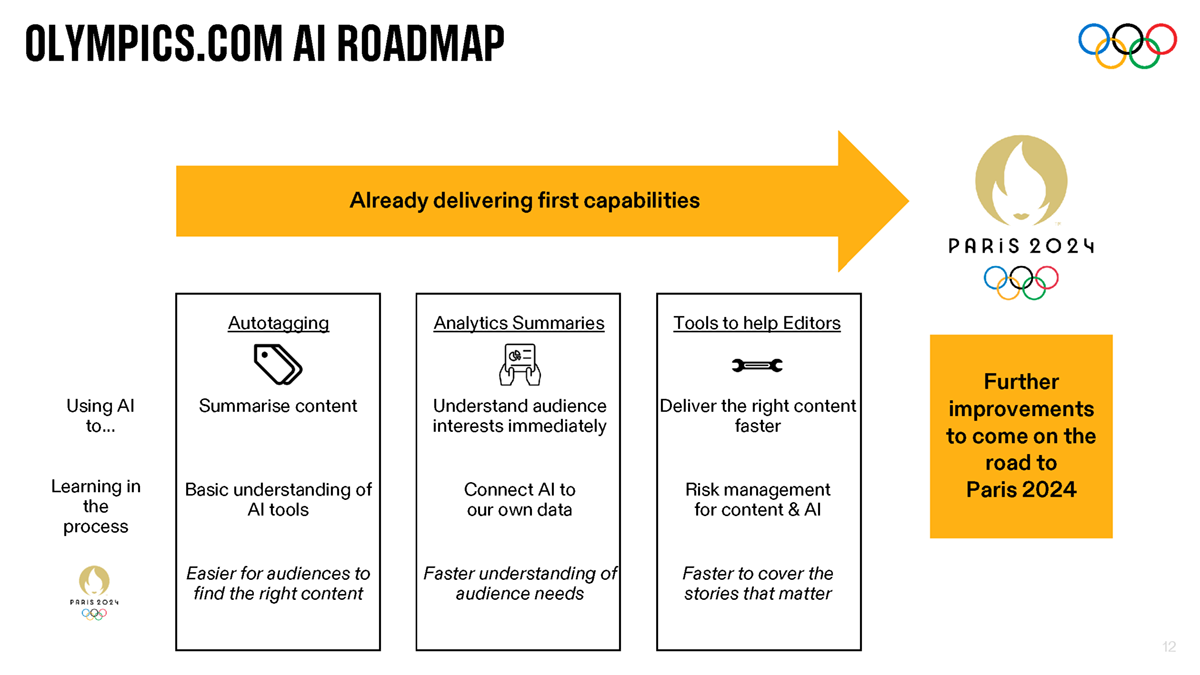

From AI-driven athlete stories to immersive 3D broadcasts, the technological innovations being deployed for Paris 2024 are set to transform the viewer experience. Within the framework provided by the IOC’s Olympic AI Agenda, developed in partnership with consultancy Deloitte, artificial intelligence is pivotal in making the Olympics more engaging, accessible and immersive for fans worldwide. And beyond enhancing this year’s Olympic experience, these technologies are paving the way for the future of sports broadcasting and fan engagement.

READ MORE: IOC takes the lead for the Olympic Movement and launches Olympic AI Agenda (IOC)

AI-Driven Fan Engagement

“There’s a lot of things we are doing with the IOC that will be behind the scenes as they release new functionality to the world,” John Tweardy, managing partner of Deloitte’s Olympic & Major Events Practice, tells Amber Jackson at Technology Magazine, “and one of those is around marketing and fan engagement.”

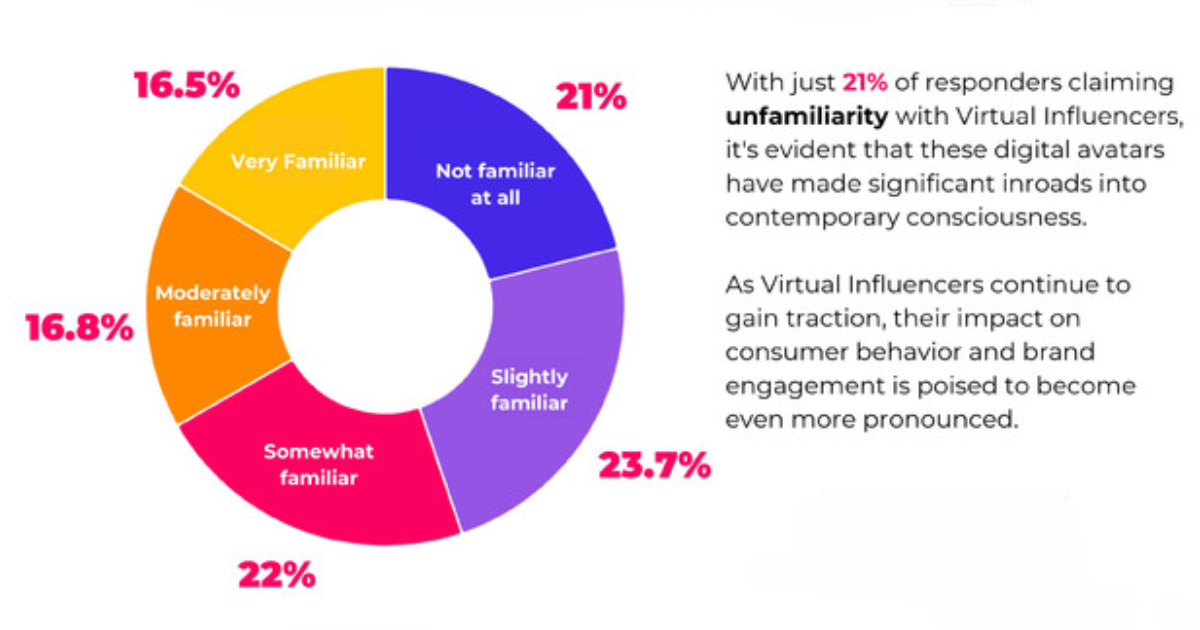

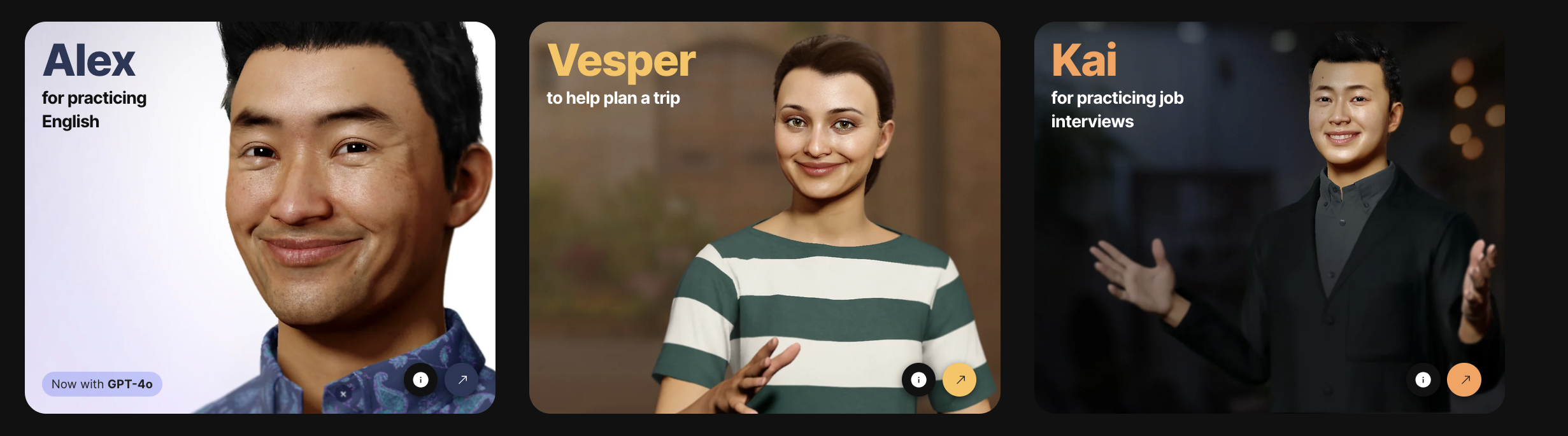

A primary element of this work is what’s called a “fan data platform,” developed for “personalized activations” such as alerting fans to upcoming moments, updates and highlights, along with serving personalized video content and promotions.

“By helping the IOC develop its fan data platform strategy, Deloitte aims to support the IOC’s goal of empowering people to immerse themselves in content that inspires them, with the end goal of customizing each fan’s digital journey and enabling them to focus on the sports they love and the athletes they follow,” Jackson writes.

“You don’t want to get information that’s generic, you want to get information that is tailored to you,” Tweardy points out. “The IOC can now engage in intentional marketing to a fan of a particular sport. Then, from the top ecosystem, they can work with their partners and help the ecosystem gain more value, making them more precise.”

READ MORE: Power Behind Paris 2024: Deloitte Pushes Olympics Innovation (Technology Magazine)

Google’s partnership with Team USA and NBCUniversal is also central to the fan experience. The collaboration is designed to help viewers and fans explore and understand the Olympic and Paralympic Games through AI tools including Google Search, Gemini and the Google Maps Platform, using AI-powered features to bring athlete stories and the competitions of the Paris Games to life.

“This partnership is more than just a sponsorship; it’s a powerful alliance that brings together the best of technology and sports,” Sarah Hirshland, CEO of the US Olympic & Paralympic Committee, commented. “By working with Google and NBCUniversal, we are ensuring that our athletes’ stories are told in the most dynamic and engaging ways possible.”

One of the standout features of Google’s partnership with NBCU is “Explain the Games,” which will use AI Overviews in Google Search. These overviews, designed to answer viewers’ questions about the Olympic and Paralympic Games with a single search, will be showcased by NBCUniversal’s production team and commentators during daytime and primetime coverage. For example, viewers can learn why lane assignments matter in swimming or how scoring works in gymnastics. The feature will also extend to NBCUniversal’s social media accounts, broadening its reach and engaging Olympic enthusiasts by anticipating their most pressing questions.

READ MORE: Google, Team USA and NBCUniversal Strike New Partnership (NBCUniversal)

During NBCUniversal’s coverage of the Paris Games, viewers will be able to follow chief superfan commentator Leslie Jones as she experiences the Olympic Games with the help of Google’s Gemini AI assistant. Gemini will help Jones come up with custom moves, learn new sports, and share her knowledge and enthusiasm with fans across NBCU’s networks and Peacock.

The partnership will also feature social videos and late-night promos showcasing Olympians and Paralympians exploring Paris. Utilizing Google Lens, Circle to Search, Immersive View in Google Maps, and Gemini, these athletes will highlight the AI-powered features that enhance their exploration of the host city. Iconic landmarks like the Eiffel Tower and Stade Roland Garros will serve as backdrops for these explorations, providing fans with a unique, behind-the-scenes look at the Olympic experience.

Enhancing Accessibility and Immersion

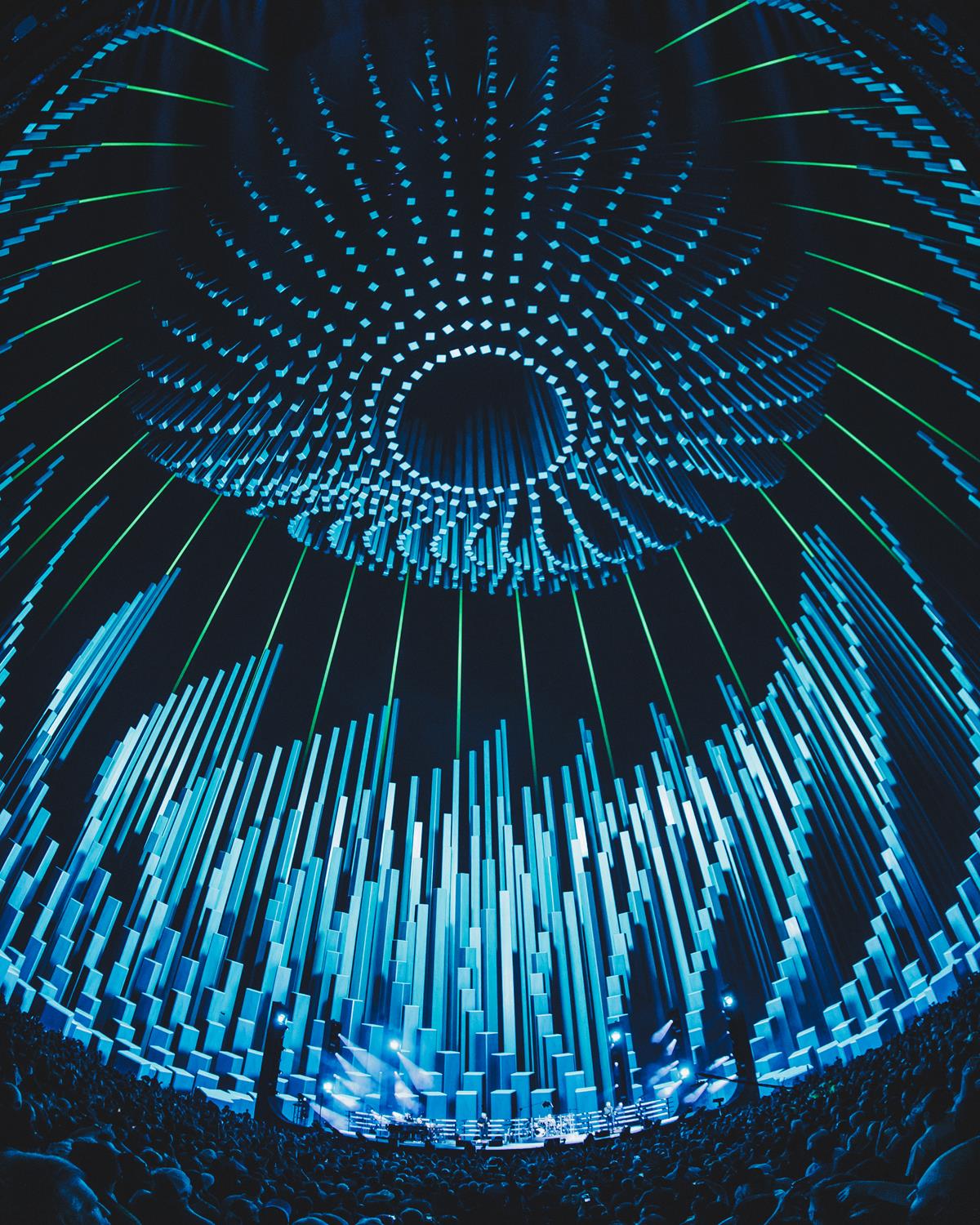

One major goal of the 2024 Paris Olympics is to offer an inclusive and engaging experience for all fans, and Intel’s advanced AI technologies are instrumental in making this vision a reality.

Intel’s AI technologies are powering real-time captions and subtitles for live coverage, which significantly improves accessibility for viewers with hearing impairments and those who prefer watching with subtitles, allowing them to fully engage with the Olympic events.

Intel’s technology is also driving advances in accessibility for visually impaired people. 3D models of both the Team USA High Performance Centre in Paris and the International Paralympic Committee headquarters in Bonn, Germany, created with AI built on Intel Xeon processors, will enable indoor, voice-guided navigation through a smartphone application.

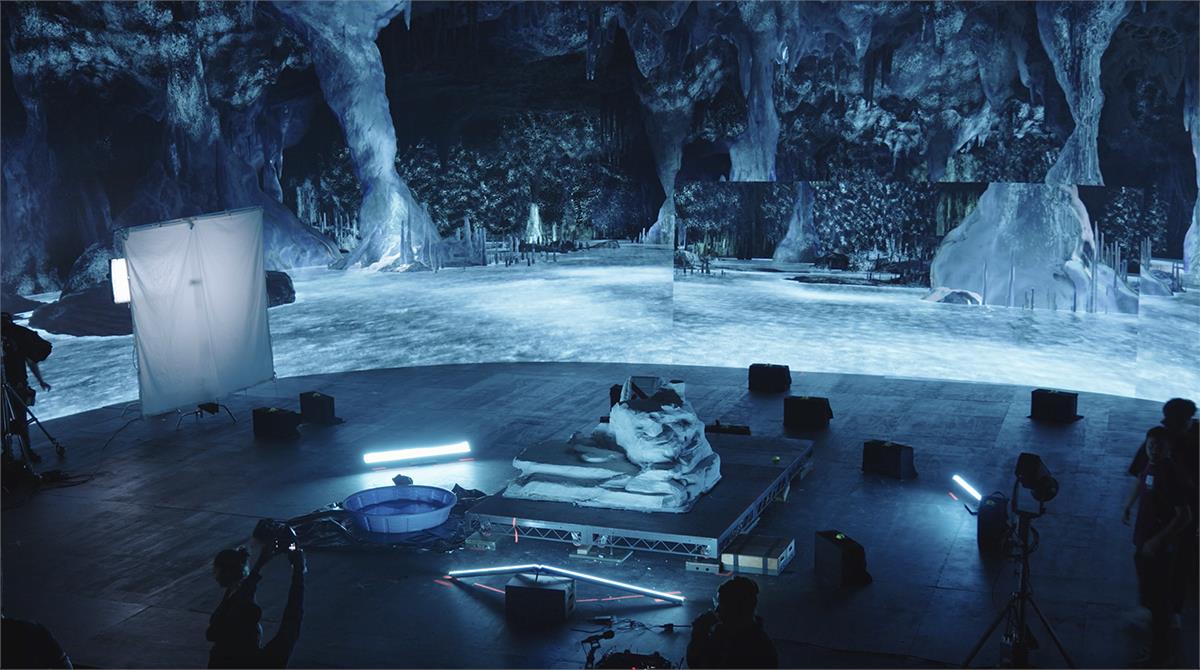

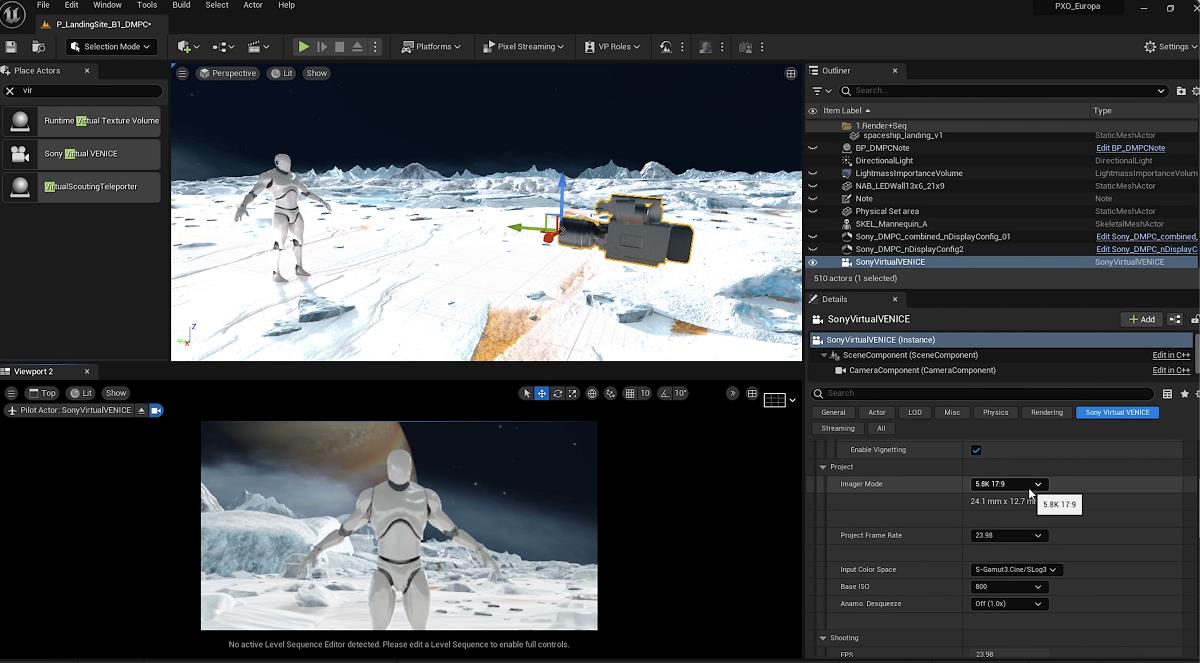

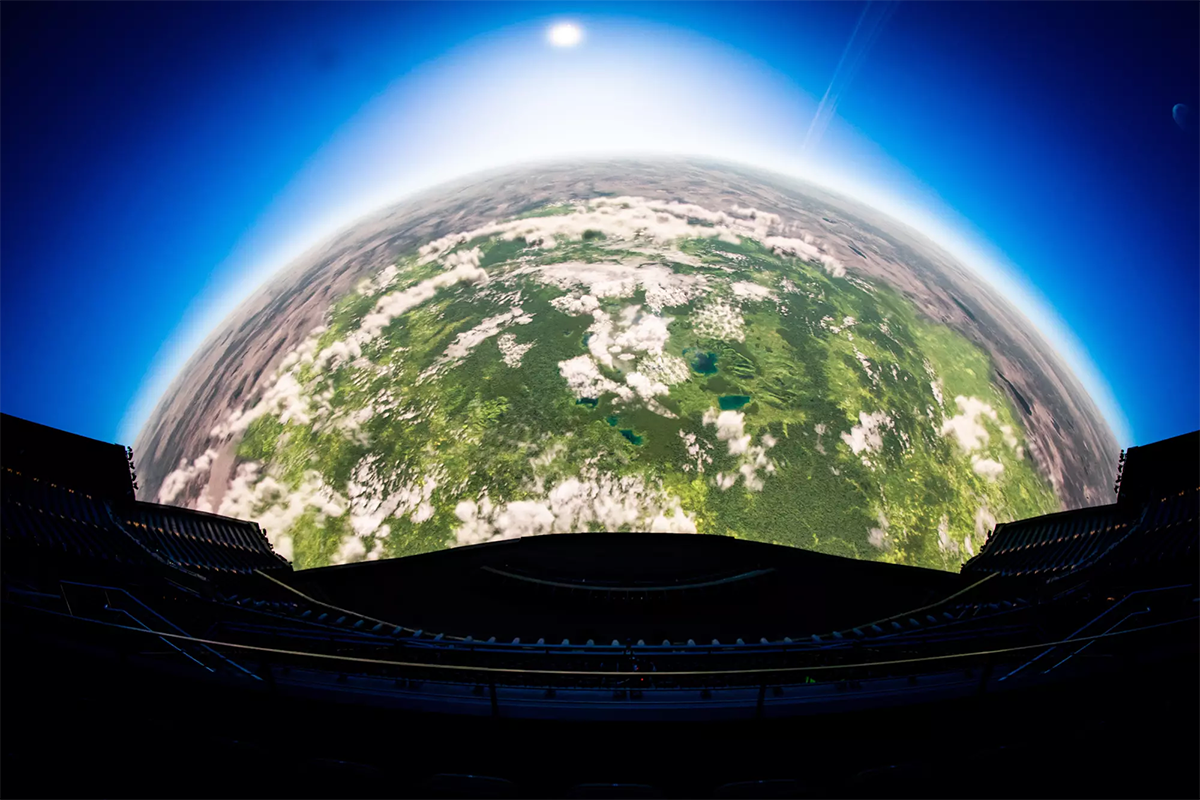

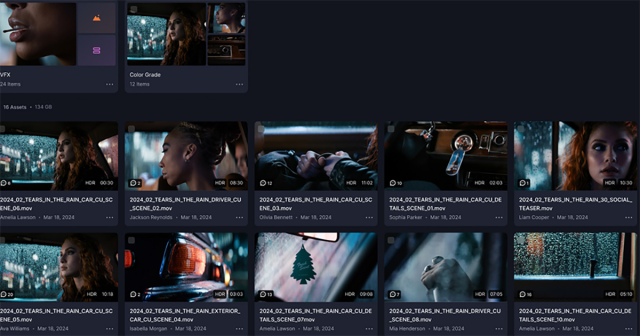

Another key element of the IOC’s plans for Paris 2024 is an interactive, AI-powered fan activation that takes spectators on a journey to becoming an Olympic athlete. The experience will use AI and computer vision to analyze participants’ athletic drills and match each participant’s profile to a specific Olympic sport. Its models were trained on Intel Gaudi accelerators, run on Intel Xeon processors with built-in AI acceleration, and are optimized with Intel OpenVINO.

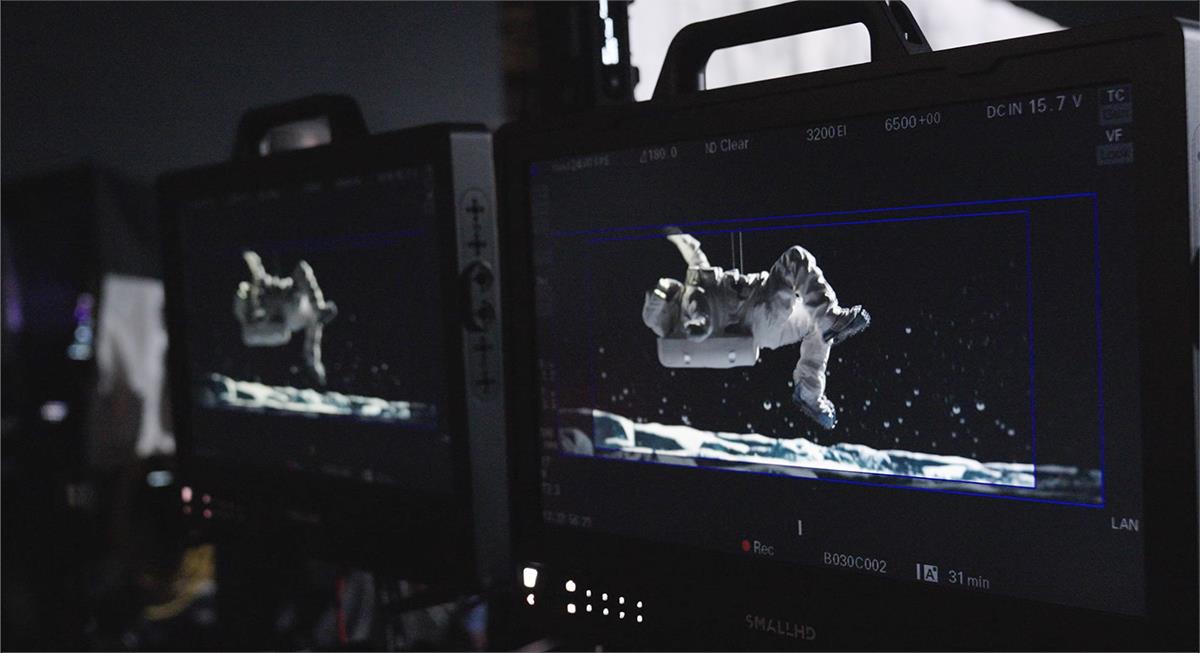

Intel’s AI is also harnessed to process data from various biometric and other sensors deployed throughout the event. This real-time data processing provides viewers with insights into athletes’ performances, offering a deeper understanding and appreciation of the competitions.

“Our partnership with Intel has propelled us into a realm where emerging technologies, powered by artificial intelligence, are reshaping the world of sport for spectators, athletes, IOC staff and Partners,” said Ilario Corna, the IOC’s chief information technology officer. “Through their AI-powered solutions, Intel has enabled us to deploy AI faster than ever before. Together, in Paris, our collaboration will create an Olympic experience like never before, embodying our shared commitment to building a better world through sport.”

READ MORE: Intel unveils AI-Platform Innovation for Paris 2024 (IOC)

Broader Impact and Future Trends

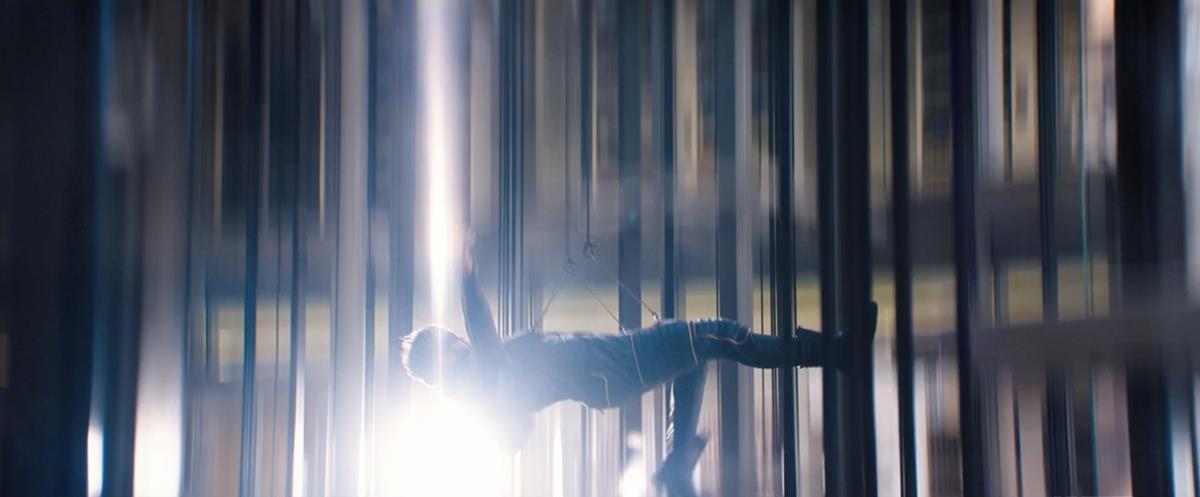

The integration of AI into the Olympic Games is a significant milestone that demonstrates the potential of technology to transform how we experience sports. The advancements seen at Paris 2024 are likely to influence how AI is utilized in future sporting events, driving further innovations in broadcast quality, interactivity, and fan engagement.

“This one-of-a-kind partnership demonstrates the endless possibilities when you combine innovative technology with premium content to enhance the viewing experience for fans of all ages,” says Dan Lovinger, president of Olympic & Paralympic Partnerships at NBCUniversal.

The successful implementation of AI technologies at the Paris 2024 Olympics sets a precedent for future sporting events. The ability to deliver real-time data, immersive broadcasts, and interactive features is likely to become a standard expectation for viewers. This shift will drive further investment in AI and other advanced technologies, pushing the boundaries of what is possible in live production and sports broadcasting.

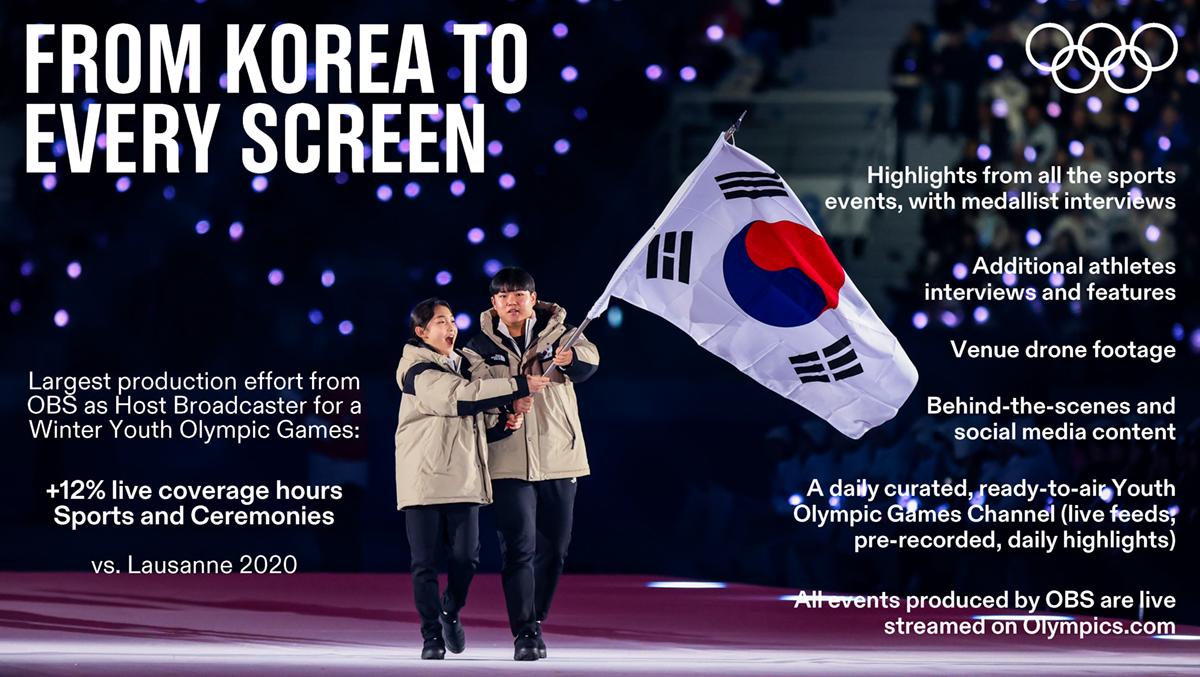

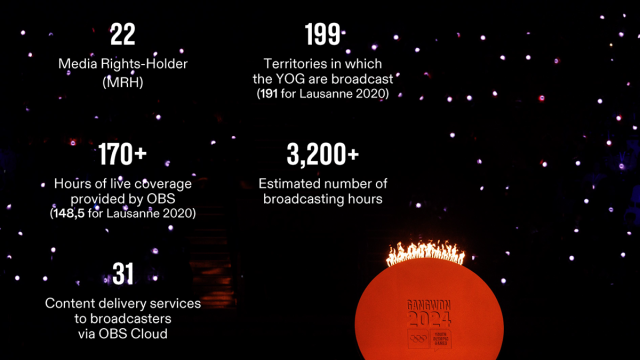

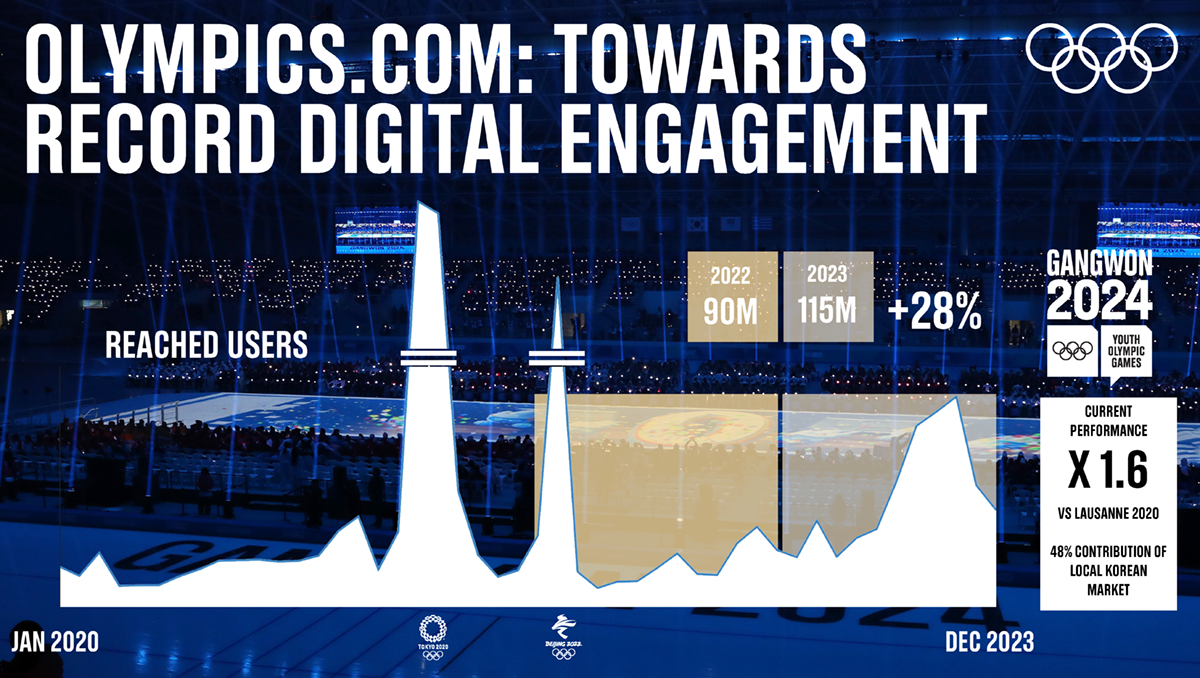

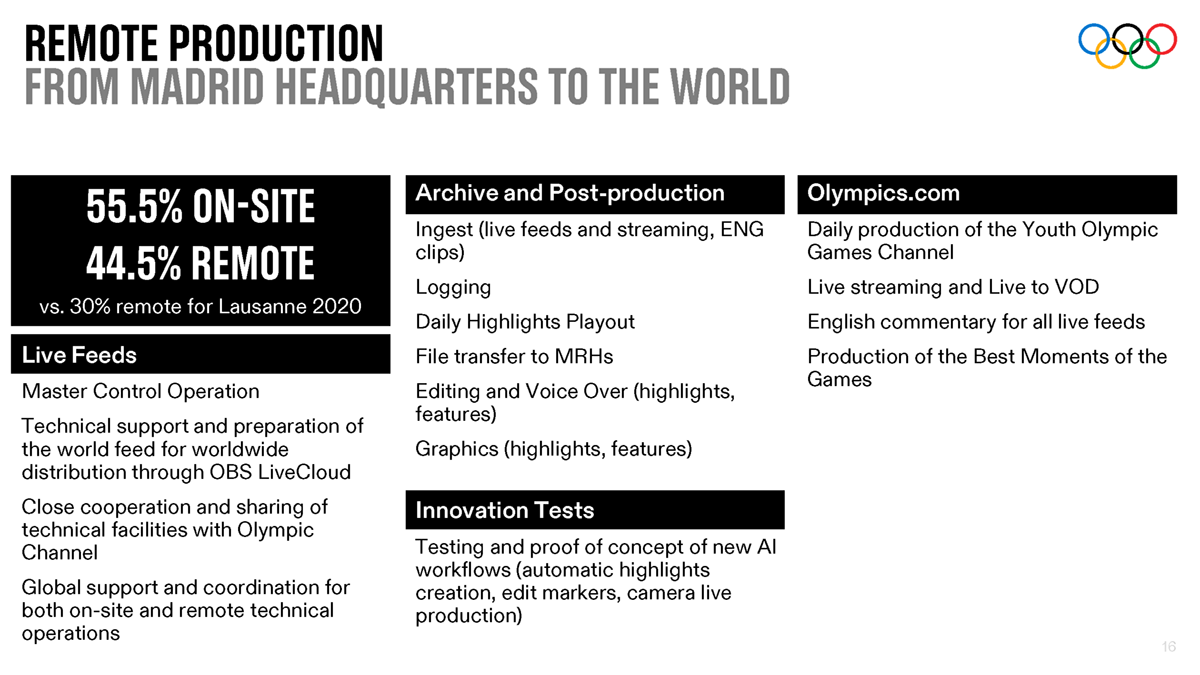

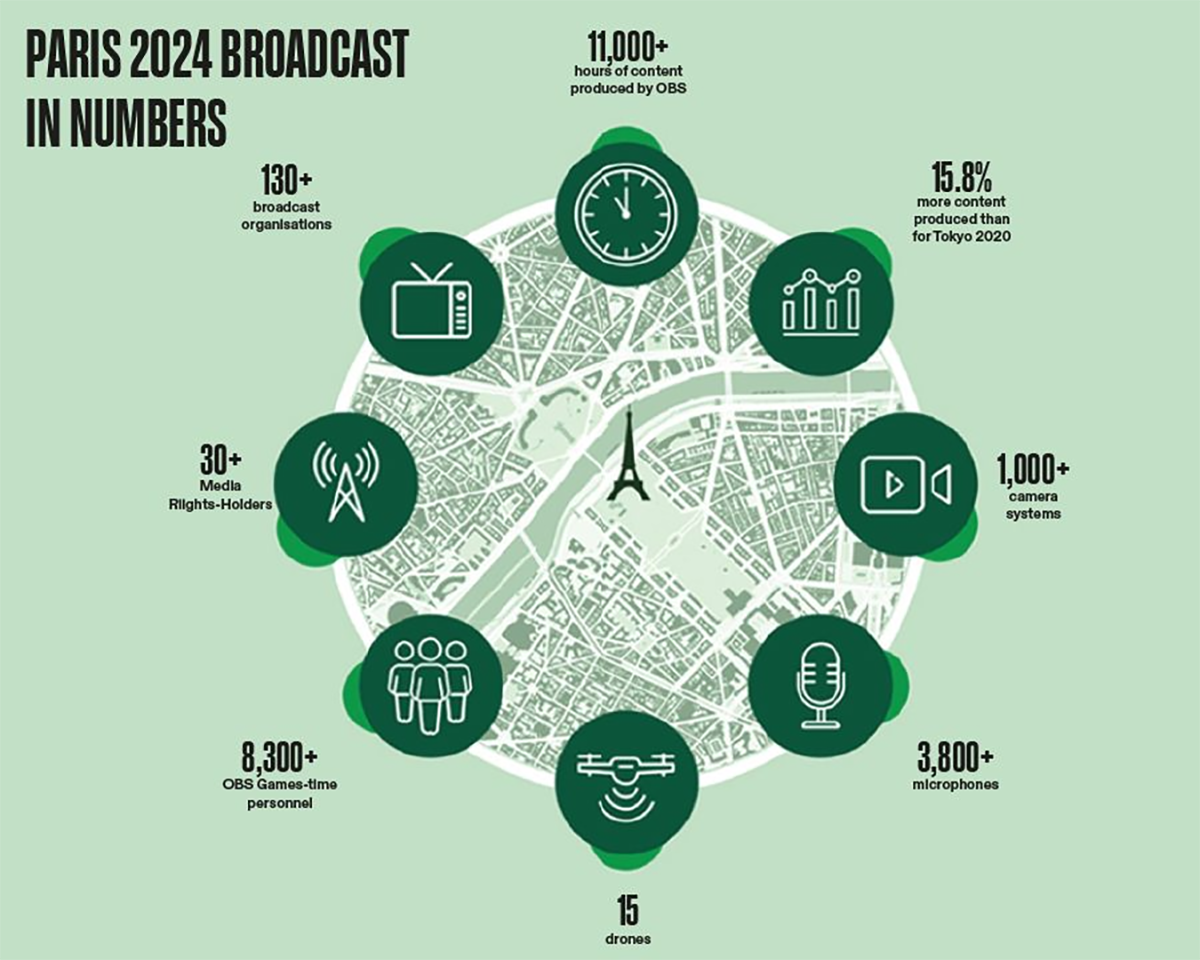

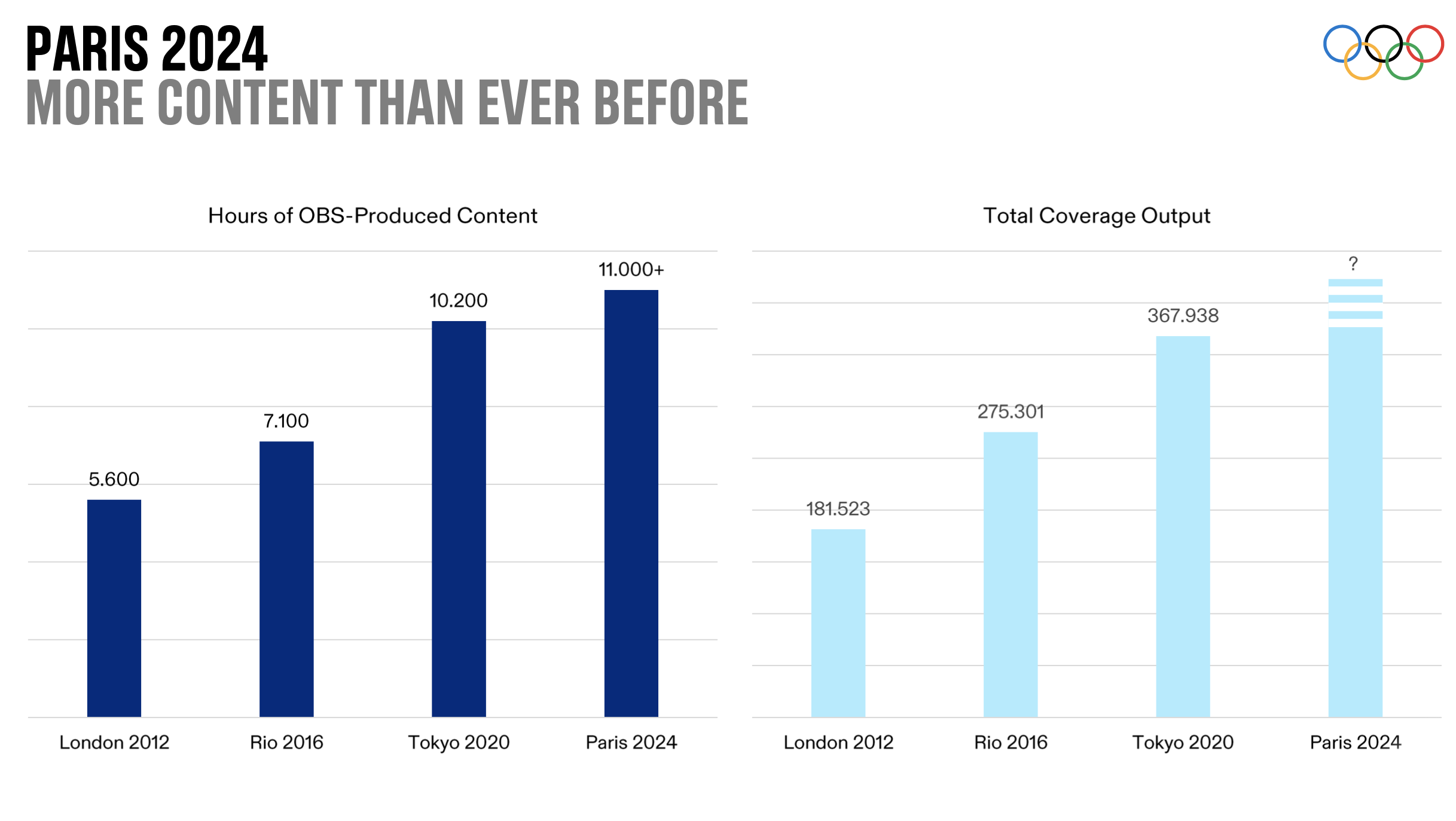

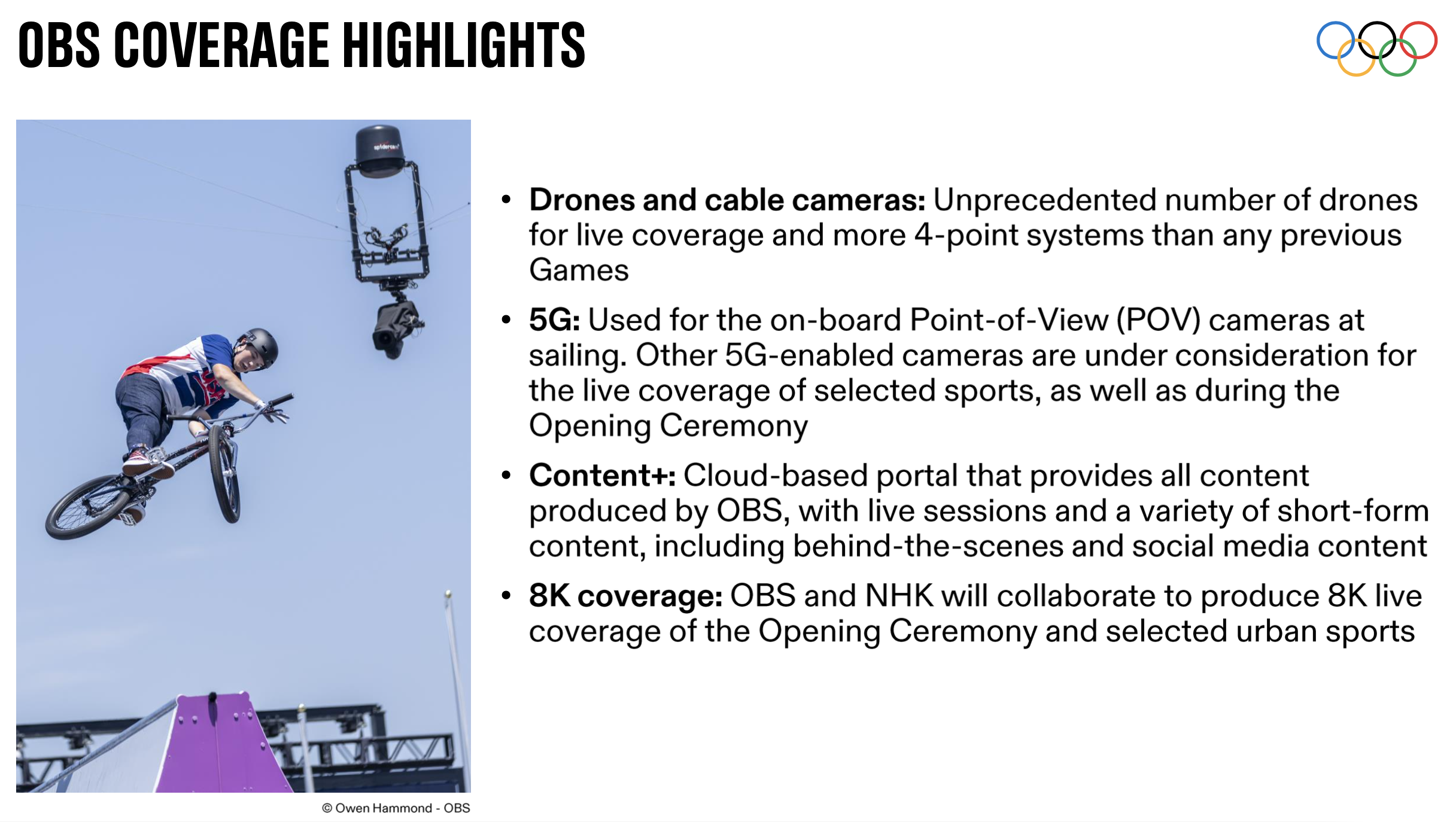

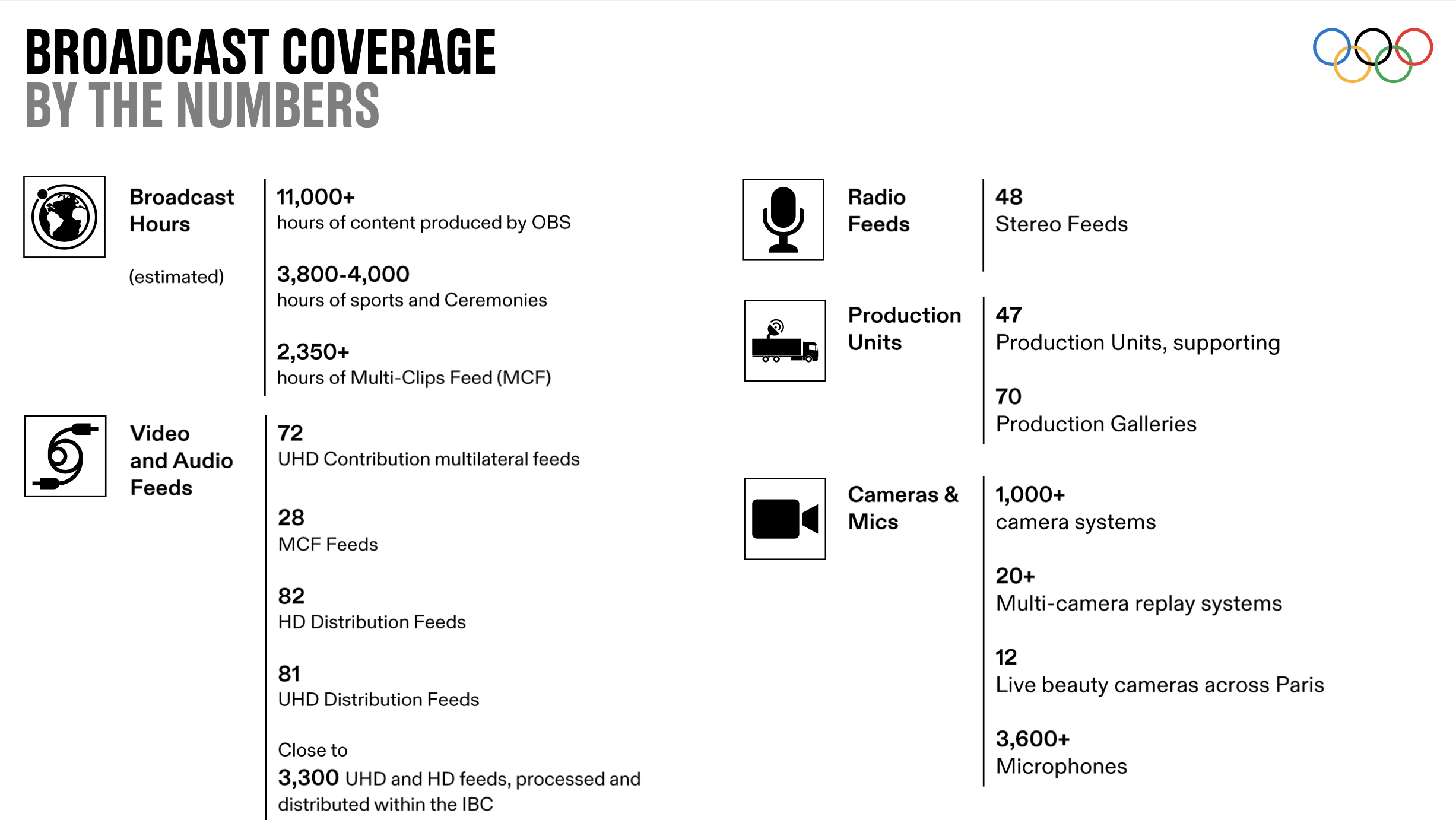

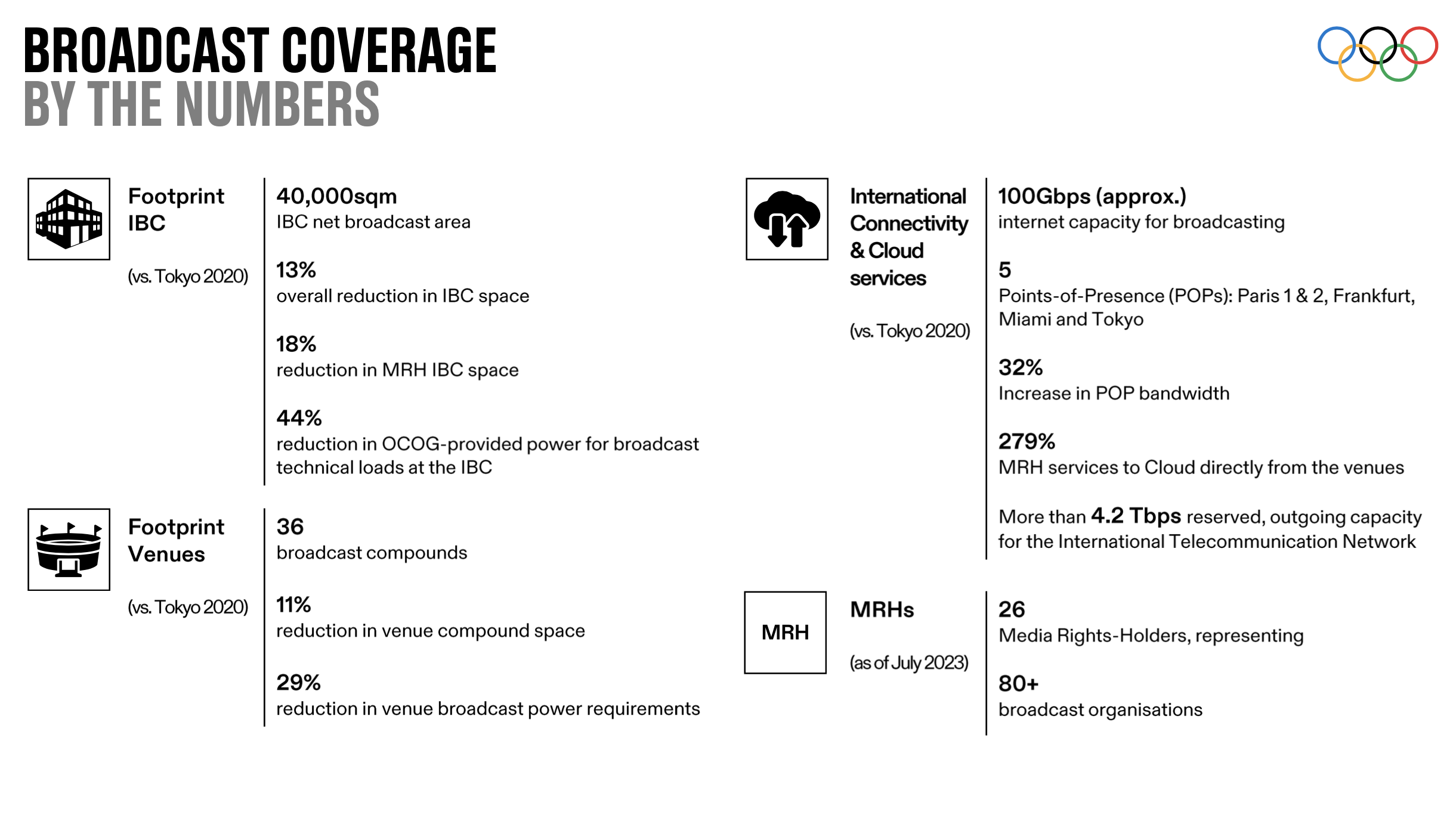

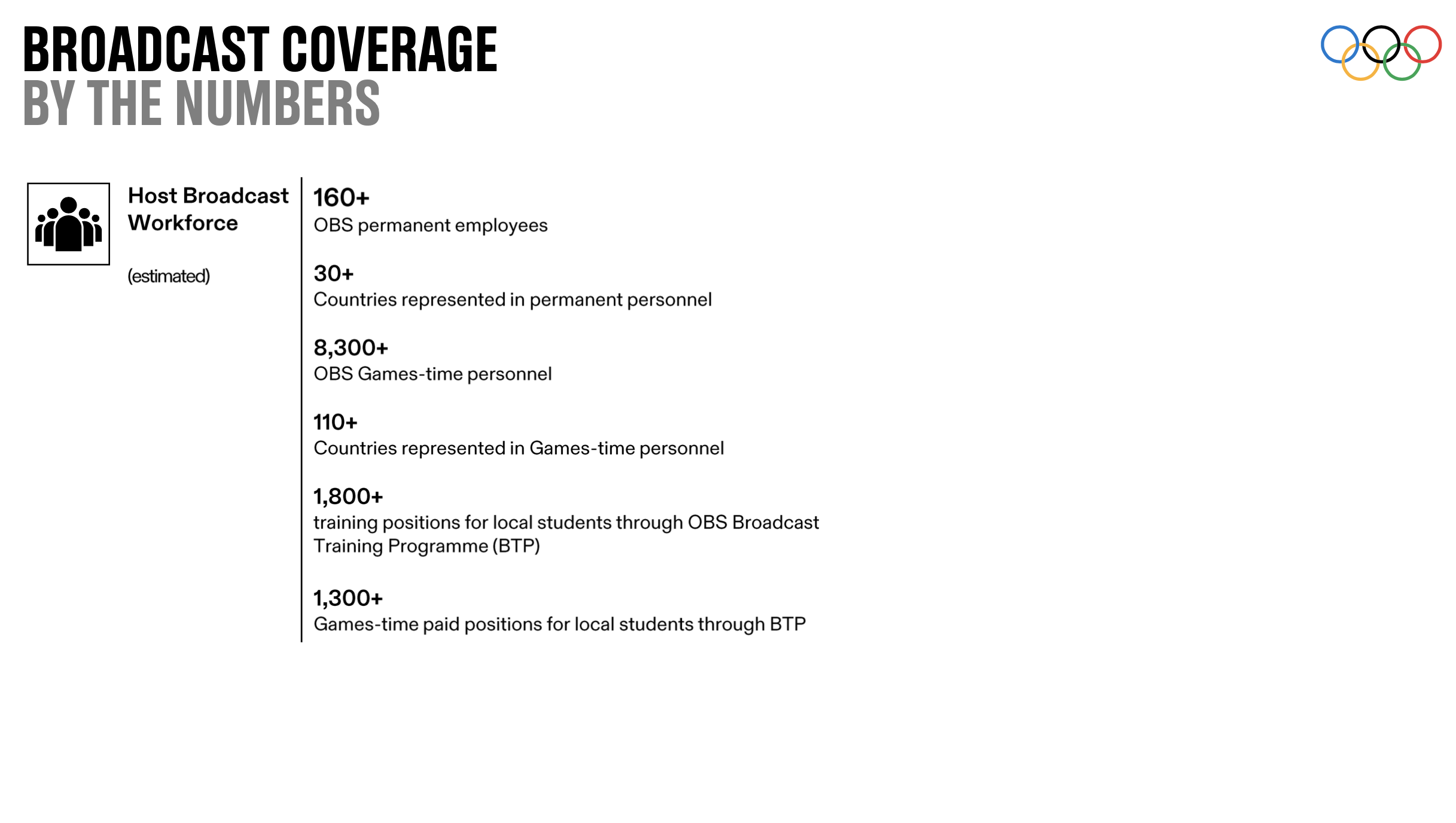

Be sure to check out Part 1 of NAB Amplify’s deep dive into AI at the 2024 Paris Games, “AI at the 2024 Paris Games: Transforming the Olympics Broadcast,” exploring how AI technology is helping Olympic Broadcasting Services deliver more than 11,000 hours of content to fans from around the world.

READ MORE: AI at the 2024 Paris Games: Transforming the Olympics Broadcast (NAB Amplify)
