TL;DR

- Synthetic media is already changing the creative process as artists (and non-artists) utilize AI for production assistance.

- Generative AI can now create text and videos, and even clone people’s voices, but some experts fear it could contribute to the spread of misinformation and fake news.

- The next evolution is data-driven synthetic media: content created in near real time and surfaced in place of traditional media. This could mean hyper-personalized ads created and delivered to your phone within seconds.

READ MORE: Generative AI Is Revolutionizing Content Creativity, but at What Cost? (The Media Line)

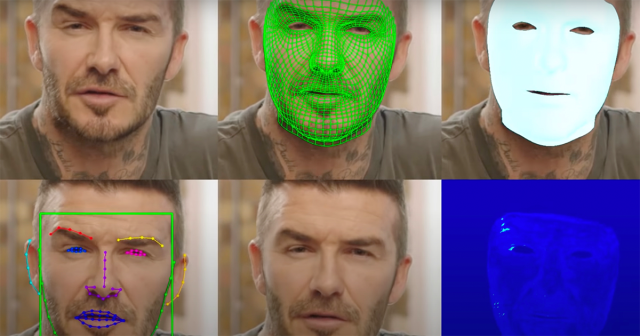

Synthetic media, sometimes referred to as “deepfake” technology, is already changing the creative process as artists (and non-artists) enlist AI for production assistance. From videos and books to customizable stock images and even cloned voice recordings, the latest generative AI technology lets us create a virtually unlimited amount of content in seconds.

Israeli firm Bria helps users make their own customizable images, in which everything from a person’s ethnicity to their expression can be easily modified. It recently partnered with stock image giant Getty Images.

“The technology can help anyone to find the right image and then modify it: to replace the object, presenter, background, and even elements like branding and copy. It can generate [images] from scratch or modify existing visuals,” Yair Adato, co-founder and CEO at Bria, told Maya Margit at The Media Line.

Another Israeli startup, D-ID, enables users to make videos from still images with its Creative Reality Studio. The company works with clients including Warner Bros.

“With generative AI we’re on the brink of a revolution,” Matthew Kershaw, VP of commercial strategy at D-ID, tells Margit. “It’s going to turn us all into creators. Suddenly instead of needing craft skills, needing to know how to do video editing or illustration, you’ll be able to access those things and actually it’s going to democratize that creativity.”

Kershaw believes that soon, people will even be able to produce feature films at home with the help of generative AI.

In fact, analyst firm Gartner puts a timeline of just seven years on that: it expects that by 2030 a major blockbuster film will be released with 90% of the film generated by AI (from text to video).

AI in Marketing

The rise of synthetic media has made it easier than ever to produce deepfake audio and video. Microsoft researchers, for instance, recently announced a new AI model that can clone a person’s voice from just a few seconds of audio. Called VALL-E, the model simulates a person’s speech and acoustic environment after listening to a three-second recording.

Such generative models are potentially valuable across a number of business functions, but marketing applications are perhaps the most common.

DALL-E 2 and other image generation tools are already being used for advertising. Heinz, Nestlé and Mattel are among the brands using the technology to generate images for marketing.

Meanwhile, the global generative AI market is expected to reach $109.37 billion by 2030, according to a report by Grand View Research.

Jasper, for example, a marketing-focused tool built on GPT-3, can produce blogs, social media posts, web copy, sales emails, ads, and other types of customer-facing content.

At the cloud computing company VMware, writers use Jasper to generate original content for marketing, from emails to product campaigns to social media copy. Rosa Lear, director of product-led growth, tells Thomas Davenport at Harvard Business Review that Jasper has helped the company ramp up its content strategy, and that the writers now have time to do better research, ideation, and strategy.

Kris Ruby, owner of a PR agency, also tells Davenport that her company is now using both text and image generation from generative models. When she uses the tools, she says, “The AI is 10%, I am 90%” because there is so much prompting, editing, and iteration involved.

She feels that these tools make one’s writing better and more complete for search engine discovery, and that image generation tools may replace the market for stock photos and lead to a renaissance of creative work.

By 2025, Gartner predicts that 30% of outbound marketing messages from large organizations will be synthetically generated, up from less than 2% in 2022.

READ MORE: Beyond ChatGPT: The Future of Generative AI for Enterprises (Gartner)

Coming Soon: Generative Synthetic Media

The next evolution is being dubbed Generative Synthetic Media (GSM). Shelly Palmer, professor of advanced media at the Newhouse School of Public Communications, defines it as data-driven synthetic media: content created in near real time and surfaced in place of traditional media.

This could happen very soon, following “an explosion” of applications built on large language models (LLMs) such as GPT-3, BLOOM, GLaM, Gopher, Megatron-Turing NLG, Chinchilla, and LaMDA.

Again, marketing and advertising are where the biggest impact will be felt first, with applications ranging from AI-driven data collection for targeting specific audiences to the creation of tailored ads, all in near real time.

Palmer envisions a natural language generation (NLG) platform that would generate a script, hyper-personalize the content (rather than targeting larger audience segments), automatically optimize it for social media, email, or display ads, and then continuously monitor its performance to ensure the content remains relevant and effective.

“It will not be long until the ideas described in this article are simply table stakes,” Palmer declares. “This will start with generative AI creating ad copy and still images (GPT-3 and Midjourney APIs), and then we’ll start to hear voice-overs and music. Next we’ll start to see on-the-fly deepfake videos, and ultimately, all production elements will be fully automated, created in near real time, and delivered.”

He thinks this will take “more than a year, less than three years.”

READ MORE: Generative Synthetic Media: The Future of the Creative Process (Shelly Palmer)

As it stands today, using generative AI effectively still requires human involvement at both the beginning and the end of the process. As a rule, creative prompts yield creative outputs. Prompting has already given rise to marketplaces where users can buy other people’s prompts for a small fee.

Davenport thinks that “Prompt Engineer” is likely to become an established profession, at least until the next generation of even smarter AI emerges.

Deepfake Concerns

Synthetic media is also sometimes referred to as “deepfake” technology, a term that carries more worrying connotations. These concerns range from questions of authorship and copyright infringement to the ethically muddy waters of fake news and of training AI on datasets biased by race or gender.

LLMs, for example, are increasingly being used at the core of conversational AI and chatbots. Models such as Facebook’s BlenderBot and Google’s BERT “are trained on past human content and have a tendency to replicate any racist, sexist, or biased language to which they were exposed in training,” reports Davenport. “Although the companies that created these systems are working on filtering out hate speech, they have not yet been fully successful.”

READ MORE: How Generative AI Is Changing Creative Work (Harvard Business Review)

AI in Law

In 2021, an Australian court ruled in favor of AI inventorship (i.e., that an AI system could be named as the inventor on a patent application). However, that decision was later overturned on appeal by the Full Court of the Federal Court of Australia. Law firm Dentons expects to see many developments and changes globally on this issue.

In the US, the White House Office of Science & Technology Policy has published a blueprint for an AI Bill of Rights, which proposes national standards for personal data collected by companies and for AI decision-making. According to Dentons, further regulation of AI decision-making is likely to remain a focus of the federal government.

The EU is going further still, seeking to set the benchmark for restrictions on the use of AI, just as it did successfully with the GDPR. Expected to be finalized in 2023, the EU AI Act will classify AI systems as posing unacceptable, high, or low/minimal risk.

As explained by Dentons, unacceptable-risk AI systems include, for example, subliminal, manipulative or exploitative systems that cause harm.

The law firm says, “We look forward to 2023 being a fruitful year in terms of the increase in scope for AI deployment, and also inevitable regulation, with the possible exponential increase in legal disputes relating to AI.”
