TL;DR

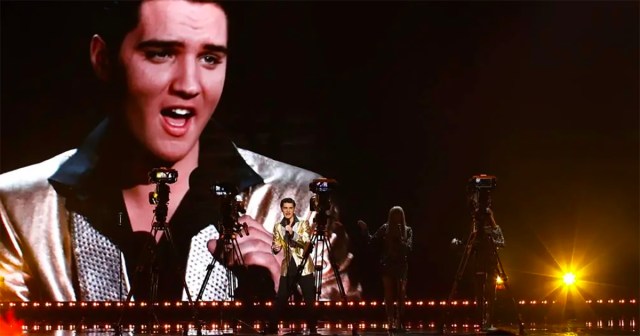

- Methods that can alter and synthesize video are increasingly accessible and often easily learned.

- The existence of deepfakes is being used as an accusation and an excuse by those hoping to discredit reality and dodge accountability.

- “The danger of manipulated media lies in the way it risks further damaging the ability of many social media users to depend on concepts like truth and proof,” writes Tiffany Hsu.

READ MORE: Worries Grow That TikTok Is New Home for Manipulated Video and Photos (The New York Times)

Manipulated video, deepfakes, and other synthesized material are cropping up all over the internet with alarming regularity, and it is no longer easy to tell what has been altered. This has serious implications for anyone interested in stopping the spread of political falsehoods and conspiracy theories.

TikTok is widely considered the worst offender, largely because of its enormous reach among internet users.

“TikTok is literally designed so media can be mashed together — this is a whole platform designed for manipulation and remixing,” Francesca Panetta, creative director for MIT’s Center for Advanced Virtuality, said in an interview with The New York Times’ Tiffany Hsu.

“What does fact-checking look like on a platform like this?”

To show how easily reality can be twisted, Panetta and her colleague Halsey Burgund made a documentary built around a deepfake of Richard Nixon announcing the failure of the 1969 Apollo 11 mission. The project, In Event of Moon Disaster, won an Emmy last year.

Panetta spoke with Hsu about their examination of deepfake disinformation.

“More than any single post, the danger of manipulated media lies in the way it risks further damaging the ability of many social media users to depend on concepts like truth and proof,” Hsu writes. “The existence of deepfakes, which are usually created by grafting a digital face onto someone else’s body, is being used as an accusation and an excuse by those hoping to discredit reality and dodge accountability — a phenomenon known as the liar’s dividend.”

According to Hsu, lawyers for at least one person charged in the January 6 riot at the US Capitol in 2021 have tried to cast doubt on video evidence from the day by citing “widely available and insidious” deepfake-making technology.

Over time, the fear is that manipulations will become both more common and harder to detect. A 2019 California law made it illegal to create or share deceptive deepfakes of politicians within 60 days of an election, yet manipulation remains rampant.

“Extended exposure to manipulated media can intensify polarization and whittle down viewers’ ability and willingness to distinguish truth from fiction,” Hsu warns.

In recent weeks, TikTok users have shared a fake screenshot of a nonexistent CNN story claiming that climate change is seasonal.

One video was edited to imply that the White House press secretary Karine Jean-Pierre ignored a question from the Fox News reporter Peter Doocy. Another video, from 2021, resurfaced this fall with the audio altered so that Vice President Kamala Harris seemed to say virtually all people hospitalized with Covid-19 were vaccinated (she had actually said “unvaccinated”).

Hany Farid, a computer science professor at the University of California, Berkeley, who sits on TikTok's content advisory council, put it this way: "When we enter this kind of world, where things are being manipulated or can be manipulated, then we can simply dismiss inconvenient facts."

As Hsu notes, methods that can alter and synthesize video are "increasingly accessible and often easily learned." They range from miscaptioning photos, cutting footage, changing its speed or sequence, splitting sound from images, cloning voices, creating hoax text messages, setting up synthetic accounts, and automating lip syncs and text-to-speech, all the way to making a full deepfake.

Hsu continues, “Many TikTok users use labels and hashtags to disclose that they are experimenting with filters and edits. Sometimes, manipulated media is called out in the comments section. But such efforts are often overlooked in the TikTok speed-scroll.”

TikTok said in a statement it had removed videos, found by The New York Times, that breached its policies, which prohibit digital forgeries “that mislead users by distorting the truth of events and cause significant harm to the subject of the video, other persons or society.”

“TikTok is a place for authentic and entertaining content, which is why we prohibit and remove harmful misinformation, including synthetic or manipulated media, that is designed to mislead our community,” TikTok spokesperson Ben Rathe told Hsu.

Machine learning could help tackle the sheer volume of fake videos, and DeepMedia has just released one such resource. DeepMedia DeepFake (DMDF) Faces V1 is a publicly available dataset designed to help build systems that detect advanced deepfakes.

DeepMedia CEO Rijul Gupta argued: "As dangerous and realistic deepfakes continue to spread, our society needs accurate and accessible detectors like DMDF Faces V1 to protect truth and ethics."

READ MORE: DeepMedia, a Leader in Advanced Synthetic Media, Releases Largest Publicly Accessible Deepfake Detection Dataset (newswires)

Without real action from social media platforms, whose goal is to make money rather than to address ethical concerns about how their technology is used, you have to wonder whether anything will stop the rot. Even now, conspiracy theorists such as Alex Jones and followers of QAnon can say black is white with impunity.

"Platforms like TikTok in particular, but really all of these social media feeds, are all about getting you through stuff quickly — they're designed to be this fire hose barrage of content, and that's a recipe for eliminating nuance," said Halsey Burgund, a creative technologist in residence at MIT. "The vestiges of these quick, quick, quick emotional reactions just sit inside our brains and build up, and it's kind of terrifying."

SOCIAL MEDIA AND HUMANITY’S DIGITAL FUTURE:

Technology and societal trends are changing the internet. Concerns over data privacy, misinformation and content moderation are growing in tandem with excitement about Web3 and blockchain possibilities. Learn more about the tech and trends driving humanity's digital future with these hand-curated articles from the NAB Amplify archives:

- The Social Media Trends That’ll Impact Your Business in 2023

- If Social Media Makes You Feel Some Type of Way, Then It’s Working

- Where We’re Headed Next With Social Media Marketing

- Social Media Is Making and Remaking Itself All the Time

- Is Recommendation Media the New Standard for Content Discovery?