READ MORE: Get ready for the next generation of AI (MIT Technology Review)

Only a few months ago the art world was agog at breakthroughs in text-to-image synthesis, but already new models have arrived capable of text-to-video. Advances in the field have been so swift that Meta’s Make-A-Video — announced just three weeks ago — looks basic.

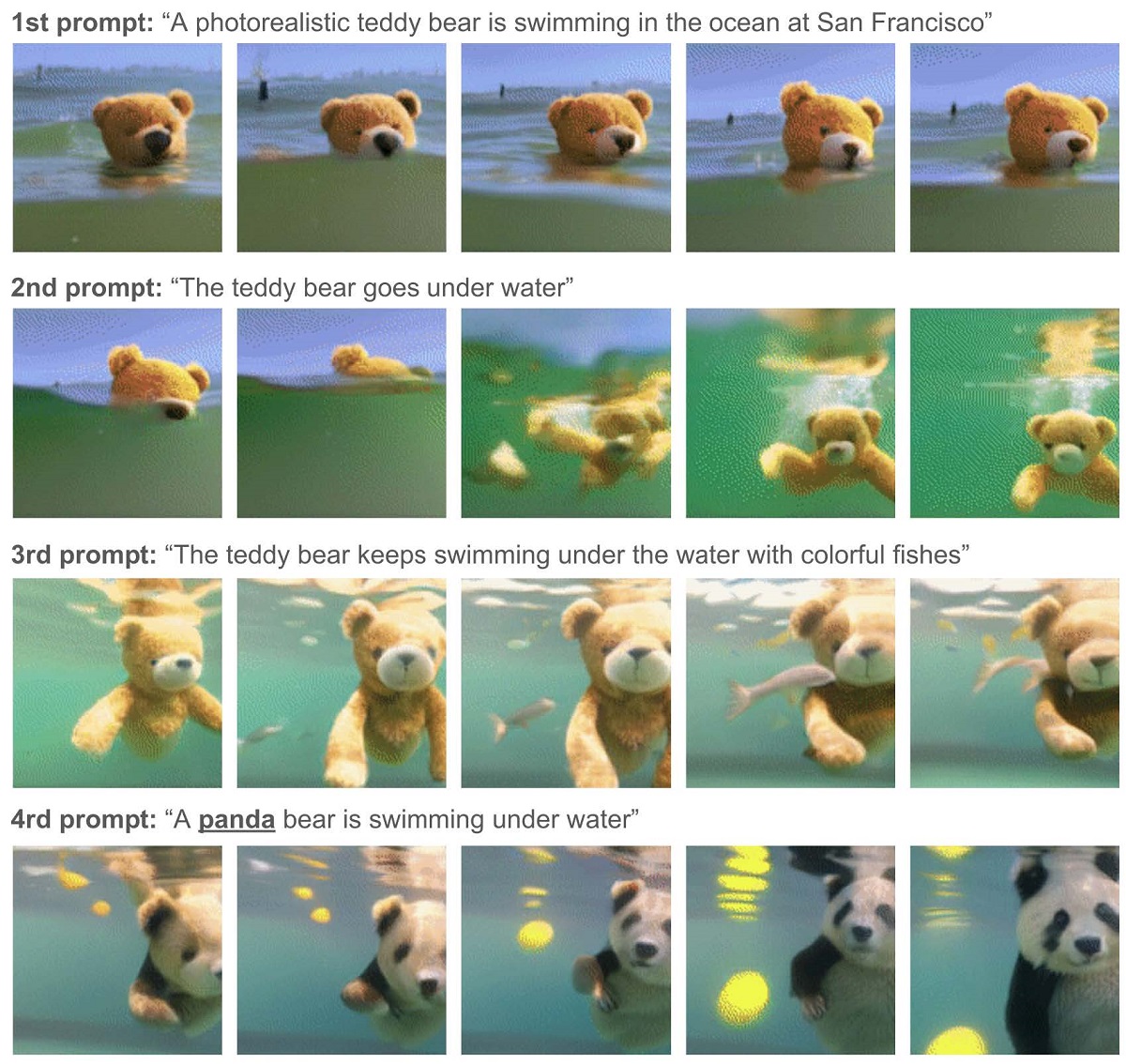

Another generative AI, called Phenaki, can create video from a still image and a prompt rather than a text prompt alone. It can also make far longer clips and users can create videos multiple minutes long based on several different prompts that form the script for the video. The example given by MIT Technology Review’s Melissa Heikkilä is of “a photorealistic teddy bear is swimming in the ocean at San Francisco. The teddy bear goes underwater. The teddy bear keeps swimming under the water with colorful fishes. A panda bear is swimming underwater.”

Heikkilä writes that “a technology like this could revolutionize filmmaking and animation. It’s frankly amazing how quickly this happened. DALL-E was launched just last year. It’s both extremely exciting and slightly horrifying to think where we’ll be this time next year.”

A white paper, “Phenaki: Variable Length Video Generation from Open Domain Textual Descriptions,” explains how generating videos from text is particularly challenging due to the computational cost, limited quantities of high-quality text-video data and variable length of videos. To address these issues, Phenaki compresses the video to a small representation of “discrete tokens.” The paper shares that “this tokenizer uses causal attention in time, which allows it to work with variable-length videos.”

“As the technology develops, there are fears it could be harnessed as a powerful tool to create and disseminate misinformation. It’s only going to become harder and harder to know what’s real online, and video AI opens up a slew of unique dangers that audio and images don’t, such as the prospect of turbo-charged deepfakes.”

— Melissa Heikkilä

It goes on to explain how it achieves a compressed representation of video. “Previous work on text to video either use per-frame image encoders or fixed length video encoders. The former allows for generating videos of arbitrary length, however in practice, the videos have to be short because the encoder does not compress the videos in time and the tokens are highly redundant in consecutive frames.

“The latter is more efficient in the number of tokens but it does not allow to generate variable length videos,” the paper says. “In Phenaki, our goal is to generate videos of variable length while keeping the number of video tokens to a minimum so they can be modeled… within current computational limitations.”

READ MORE: Phenaki: Variable Length Video Generation from Open Domain Textual Descriptions (Phenaki)

Google has also created a text-to-video AI model called DreamFusion. This new tool generates 3D images that can be viewed from any angle, the lighting can be changed, and the model can be placed into any 3D environment — handy for any metaverse building you can imagine.

In the paper, “DreamFusion: Text-to-3D using 2D Diffusion,” researchers explain that existing generative AI have been “driven by diffusion models trained on billions of image-text pairs.” However, adapting this approach to 3D synthesis “would require large-scale datasets of labeled 3D assets and efficient architectures for denoising 3D data, neither of which currently exist.”

Google circumvents these limitations by using a pretrained 2D text-to-image diffusion model to perform text-to-3D synthesis.

After a bit more hocus-pocus behavior, “the resulting 3D model of the given text can be viewed from any angle, relit by arbitrary illumination, or composited into any 3D environment. Our approach requires no 3D training data and no modifications to the image diffusion model, demonstrating the effectiveness of pretrained image diffusion models as priors.”

READ MORE: DreamFusion: Text-to-3D using 2D Diffusion (DreamFusion)

You may think that’s wonderful, but such advances raise ethical questions nonetheless — given the inherent bias of the data sets on which previous AI text-to-speech engines have been built.

“As the technology develops, there are fears it could be harnessed as a powerful tool to create and disseminate misinformation,” warns Heikkilä. “It’s only going to become harder and harder to know what’s real online, and video AI opens up a slew of unique dangers that audio and images don’t, such as the prospect of turbo-charged deepfakes.”

According to researcher Matt Swayne at Pennsylvania State University, AI-generated video could be a powerful tool for misinformation, because people have a greater tendency to believe and share fake videos than fake audio and text versions of the same content.

READ MORE: Video fake news believed more, shared more than text and audio versions (Pennsylvania State University)

For Pheraki, while the videos their model produces are not yet indistinguishable in quality from real ones, “getting to that bar for a specific set of samples is within the realm of possibility.” But before releasing their model, the creators want to get a better understanding of data, prompts, and filtering outputs and measure biases in order to mitigate harms.

The European Union is trying to do something about it. The “AI Liability Directive“ is a new bill and is part of a push from Europe to force AI developers not to release dangerous systems.

According to Heikkilä, the bill will add teeth to the EU’s AI Act, which is set to become law around a similar time. The AI Act would require extra checks for “high risk” uses of AI that have the most potential to harm people. This could include AI systems used for policing, recruitment, or health care.

“It would give people and companies the right to sue for damages when they have been harmed by an AI system — for example, if they can prove that discriminatory AI has been used to disadvantage them as part of a hiring process.”

But there’s a catch: Consumers will have to prove that the company’s AI harmed them, which could be a huge undertaking.

READ MORE: A quick guide to the most important AI law you’ve never heard of (MIT Technology Review)

AI ART — I DON’T KNOW WHAT IT IS BUT I KNOW WHEN I LIKE IT:

Even with AI-powered text-to-image tools like DALL-E 2, Midjourney and Craiyon still in their relative infancy, artificial intelligence and machine learning is already transforming the definition of art — including cinema — in ways no one could have ever predicted. Gain insights into AI’s potential impact on Media & Entertainment in NAB Amplify’s ongoing series of articles examining the latest trends and developments in AI art:

- What Will DALL-E Mean for the Future of Creativity?

- Recognizing Ourselves in AI-Generated Art

- Are AI Art Models for Creativity or Commerce?

- In an AI-Generated World, How Do We Determine the Value of Art?

- Watch This: “The Crow” Beautifully Employs Text-to-Video Generation