A new AI is so good at producing images that it could quickly find a home as part of the production process… but it’s definitely not putting humans out of a job.

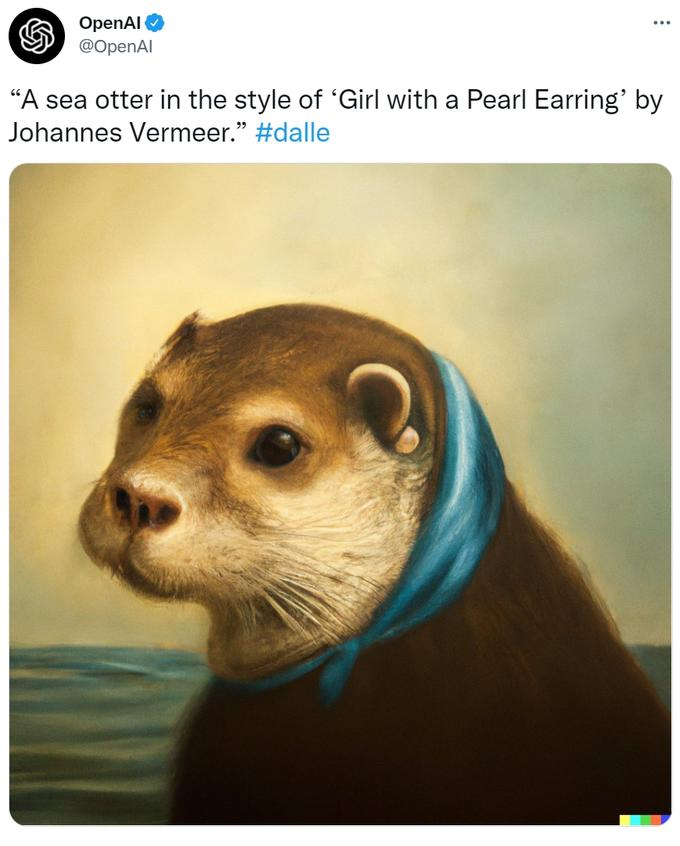

DALL-E 2 is a new neural network algorithm from research lab OpenAI. It hasn’t been released to the public but a small and growing number of people — one thousand a week — have been given private beta access and are raving about it.

“It’s clear that DALL-E — while not without shortcomings — is leaps and bounds ahead of existing image generation technology,” said Aaron Hertzmann at The Conversation.

READ MORE: Give this AI a few words of description and it produces a stunning image – but is it art? (The Conversation)

“It is the most advanced image generation tool I’ve seen to date,” says Casey Newton at The Verge. “DALL-E feels like a breakthrough in the history of consumer tech.”

Visual artist Alan Resnick, another beta tester, tweeted: “Every image in this thread was entirely created by the AI called DALL-E 2 from @OpenAI from simple text prompts. I’ve been using it for about a day and I feel truly insane.”

By all accounts, using DALL-E 2 is child’s play. You simply type a short phrase into a text box, and it pings back six images in less than a minute.

But instead of being culled from the web, the program creates six brand-new images, each of which reflects some version of the entered phrase. For example, when Hertzmann gave DALL-E 2 the text prompt “cats in devo hats,” it produced 10 images that came in different styles.

As the name suggests, this is the second iteration of the system, upgraded to generate more realistic and accurate images at four times the resolution.

DALL-E 2 can create original, realistic images and art from a text description. It can combine concepts, attributes, and styles; make realistic edits to existing images from a natural language caption; and add and remove elements while taking shadows, reflections, and textures into account.

“It’s staggering that an algorithm can do this,” Hertzmann reflects. “Not all of the images will look pleasing to the eye, nor do they necessarily reflect what you had in mind. But, even with the need to sift through many outputs or try different text prompts, there’s no other existing way to pump out so many great results so quickly — not even by hiring an artist. And, sometimes, the unexpected results are the best.”

How does it work? As explained on OpenAI’s website, DALL-E 2 has learned the relationship between images and the text used to describe them. It uses a process called “diffusion,” which starts with a pattern of random dots and gradually alters that pattern towards an image when it recognizes specific aspects of that image.

Although there is a debate to be had about whether what the AI produces is art, that almost seems beside the point when DALL-E 2 appears to automate so much of the creative process itself.

It can already create realistic images in seconds, making it a tool that will find a ready use in production. You could imagine using it to rapidly put together storyboards, or to produce imagery to sell a pitch, where you can quickly visualize characters or locations and just as quickly iterate on them.

Technology and media analyst Ben Thompson suggests how DALL-E could be used to create extremely cheap environments and objects in the metaverse.

What is exciting is the potential of such a tool to help a creative artist brainstorm and evolve ideas.

The term for this is prompting.

“I would argue that the art, in using a system like DALL-E 2, comes not just from the final text prompt, but in the entire creative process that led to that prompt,” says Hertzmann. “Different artists will follow different processes and end up with different results that reflect their own approaches, skills and obsessions.”

READ MORE: DALL-E, the Metaverse, and Zero Marginal Content (Stratechery)

Some artists, like Ryan Murdoch, have advocated for prompt-based image-making to be recognized as art.

Johnny Johnson, who teaches immersive production at the UK’s National Film and Television School (NFTS) StoryFutures Academy, thinks future versions of AI tech like DALL-E 2 will be capable of making entire feature films with AI-generated scripts and AI-generated audio performances alongside the images.

“DALL-E 2 will change the industry from production design and concept art right across the board,” he tells NAB Amplify. “New jobs will be created such as Prompt Engineer, who writes the prompt into the AI to generate very specific outputs.”

Naturally, there are alarm bells. No Film School headlines its article “Will Filmmakers Be Needed in the Future?”

“If DALL-E 2’s technology is truly as groundbreaking and revolutionary as advertised, either as it is now or in a future version, who’s to say that clients are going to need the help of filmmakers or video professionals in the future at all?”

NFS continues, “The same could potentially be even more true for graphic designers, 3D animators, and digital artists of any ilk.”

But as The Verge’s Newton observes, DALL-E is hardly sentient. “It seems wrong to describe any of this as ‘creative’: what we’re looking at here are nothing more than probabilistic guesses, even if they have the same [emotional] effect that looking at something truly creative would.”

READ MORE: How Dall-E Could Power a Creative Revolution (The Verge)

In that sense, AI can also help maintain the creative spark that comes with happy accidents.

As Hertzmann explains, “When I have something very specific I want to make, DALL-E 2 often can’t do it. The results would require a lot of difficult manual editing afterward. It’s when my goals are vague that the process is most delightful, offering up surprises that lead to new ideas that themselves lead to more ideas and so on.”

No Deepfakes Here

Perhaps stung by accusations of bias in its language model GPT-2, OpenAI (which was founded in 2015 by investors including Elon Musk) is at pains to “develop and deploy AI responsibly.”

Part of this effort lies in opening up DALL-E to select users in order to stress-test its limitations and capabilities, and in limiting the AI’s ability to generate violent, hateful, or adult images.

It explains, “By removing the most explicit content from the training data, we minimized DALL-E 2’s exposure to these concepts. We also used advanced techniques to prevent photorealistic generations of real individuals’ faces, including those of public figures.”

For example, type in the keyword “shooting” and it will be blocked, Newton finds. “You’re also not allowed to use it to create images intended to deceive — no deepfakes allowed. And while there’s no prohibition against trying to make images based on public figures, you can’t upload photos of people without their permission, and the technology seems to slightly blur most faces to make it clear that the images have been manipulated.”

As of mid-June, OpenAI hadn’t yet made any decisions about whether or how DALL-E might someday become available more generally. But on September 28, OpenAI announced in a blog post that the waitlist had been removed, opening access to everyone.

“More than 1.5M users are now actively creating over 2M images a day with DALL-E — from artists and creative directors to authors and architects — with over 100K users sharing their creations and feedback in our Discord community,” the company stated.

READ MORE: DALL·E Now Available Without Waitlist (OpenAI)

Artist and software engineer Jim Clyde Monge gives a rundown on what it will cost to use DALL-E 2: “The pricing will remain the same as it has since July when a credit-based system was introduced. Credits can be purchased for $15/115 generations. And in case you’re wondering how many credits you can buy at once, it’s $1,500.”
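
For a rough sense of what those figures mean per image request, the arithmetic can be sketched in a few lines of Python. This uses only the numbers Monge quotes; the function names are made up for illustration, and the idea that credits are sold only in whole $15 packs is an assumption, not something the source states.

```python
PACK_PRICE_USD = 15          # price of one credit pack, as quoted by Monge
GENERATIONS_PER_PACK = 115   # generations per pack, as quoted by Monge
MAX_PURCHASE_USD = 1500      # largest single purchase, as quoted by Monge


def cost_per_generation():
    """Average price of a single generation at the quoted pack rate."""
    return PACK_PRICE_USD / GENERATIONS_PER_PACK


def generations_for(budget_usd):
    """Generations a budget buys. ASSUMPTION: only whole $15 packs are sold."""
    whole_packs = budget_usd // PACK_PRICE_USD
    return whole_packs * GENERATIONS_PER_PACK


print(f"about ${cost_per_generation():.2f} per generation")
print(f"{generations_for(MAX_PURCHASE_USD):,} generations at the maximum purchase")
```

If credits can in fact be bought in other increments, only `generations_for` would need to change.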

Even with its limitations, “DALL-E 2 image generator is still a powerful tool that can be used to create some really cool images. I encourage you to check it out and see what you can create,” says Monge.

READ MORE: OpenAI’s Dall-E 2 Is Finally Available To Everyone (Jim Clyde Monge)

But DALL-E 2 isn’t the only text-to-image system advancing this field.

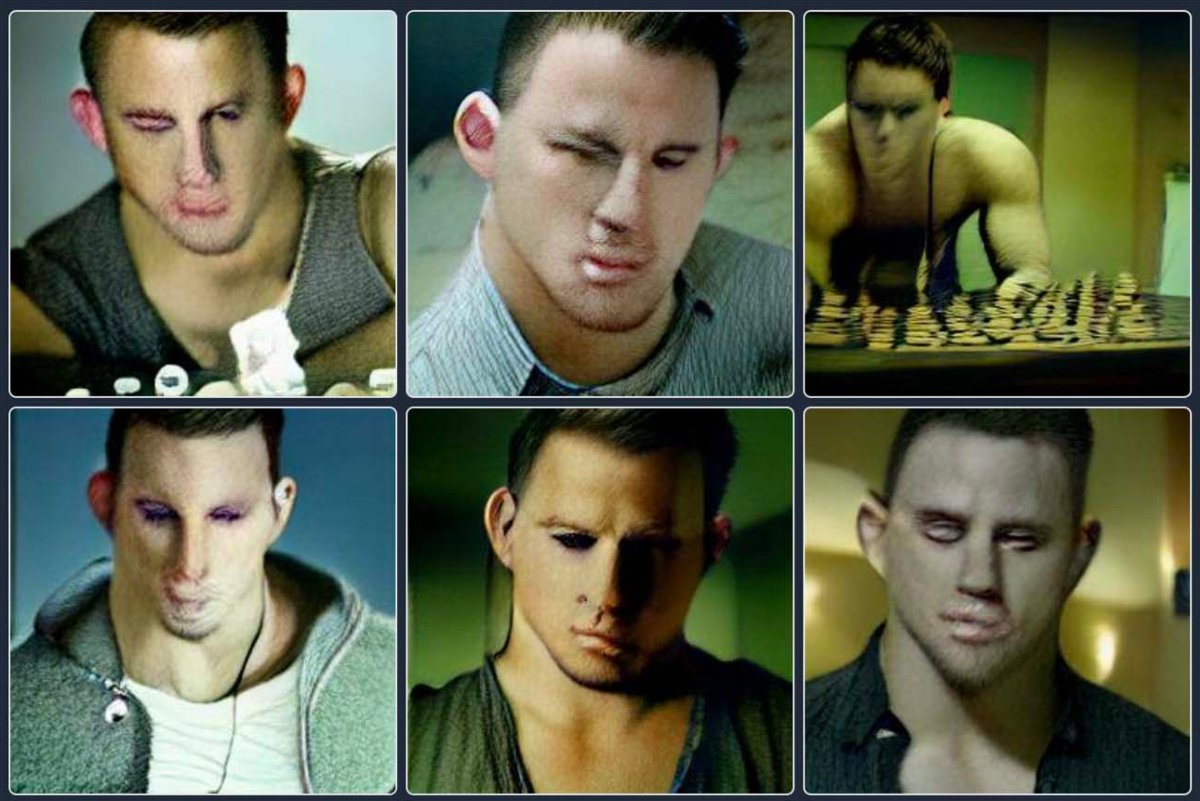

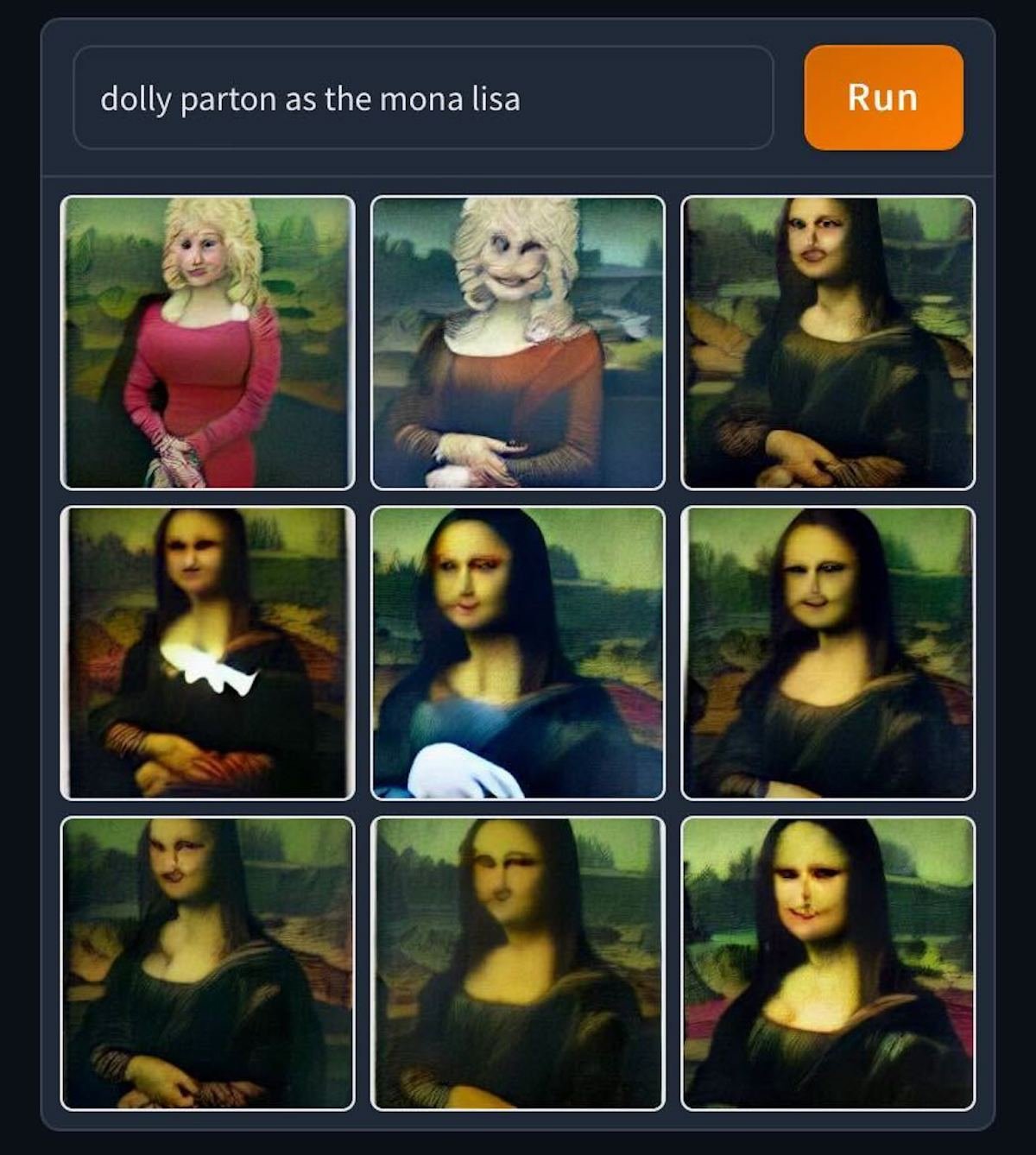

Google has a similar project called Imagen, while HuggingFace has released its own text-to-image engine, called DALL-E mini. This is not to be confused with the original and is no relation. HuggingFace might expect a cease and desist letter in the mail because not only does it use a similar name but the engine doesn’t appear to be anywhere near as good as OpenAI’s.

Asked to generate images of actor Channing Tatum, the AI came back with a set of images Francis Bacon would be proud of. Nonetheless, this technology is coming and will be in use in production faster than you think.

READ MORE: An AI Image Generator Is Going Viral, With Horrific Results (Hyperallergic)

WATCH THIS: John Oliver Details His AI-Generated Cabbage Love Affair (Art)

GAIs and DALL-E: Borrowed or Stolen Art?

By Abby Spessard

READ MORE: Is DALL-E’s art borrowed or stolen? (Engadget)

DALL-E, a portmanteau of WALL-E and Salvador Dalí, is able to create art from just a phrase. But where does this art come from? Engadget’s Daniel Cooper debates whether it’s right that “the AIs of the future are able to produce something magical on the backs of our labor, potentially without our consent or compensation.”

Training GAIs

To create the art, Generative Artificial Intelligences (GAIs) need to train and gain experience. “By experience I mean examining and finding patterns in data,” Ben Hagag, head of research at Darrow, clarifies. An example of this is Google and its use of speech data. “The model understood the patterns, how the language is built, the syntax and even the hidden structure that even linguists find hard to define.” DALL-E and other GAIs like it learn in the same way.

DALL-E Mini, now known as Craiyon, was created by French developer Boris Dayma. He’s open about where the images for training Craiyon came from: “In early models, still in some models, you ask for a picture — for example mountains under the snow, and then on top of it, the Shutterstock or Alamy watermark.” After noticing that, he found himself sorting through much of the data the GAI had learned from.

Using Copyrighted Works

According to a Creative Commons report, “the use of works to train AI should be considered non-infringing by default, assuming that access to the copyright works was lawful at the point of input.” Cooper points out that “the legal situation is not a particularly clear one, especially not in the US, where there have been few cases covering Text and Data Mining, or TDM.

“In the US, TDM is broadly covered by Fair Use, which permits various forms of copying and scanning for the purposes of allowing access. This isn’t, however, a settled subject, but there is one case that people believe sets enough of a precedent to enable the practice.

“Authors Guild v. Google (2015) was brought by a body representing authors, which accused Google of digitizing printed works that were still held under copyright,” he says. While the initial purpose of the work was to catalog and database texts to make research easier, Cooper explains that the authors were concerned Google was violating copyright. Even though the text was not made publicly available, they argued, Google should not be allowed to scan and store it. Google won the case.

What does this mean for DALL-E? “Until there is a solid case, it’s likely that courts will apply the usual tests for copyright infringement.”

Trained with Our Data

“Artificial intelligence isn’t simply a toy, or an interesting research project, but something that has already caused plenty of harm.” Clearview AI, for example, was a company that claimed to have a fairly comprehensive image recognition database. “This technology was used by billionaire John Catsimatidis to identify his daughter’s boyfriend,” Cooper notes. “Clearview has offered access not just to law enforcement, its supposed corporate goal, but to a number of figures associated with the far right.” And not only that, but the system has been shown to be less than reliable, leading to “a number of wrongful arrests.”

Cooper advises that “it would be a mistake to suggest that automating image generation will inevitably lead to the collapse of civilization. But it’s worth being cautious about the effects on artists, who may find themselves without a living if it’s easier to commission a GAI to produce something for you.”

How Does Generative AI Work?

By Abby Spessard

READ MORE: How do DALL-E, Midjourney, Stable Diffusion, and other forms of generative AI work? (Big Think)

Generative AI is taking the tech world by storm even as the debate about AI art rages on. “Meaningful pictures are assembled from meaningless noise” is how Tom Hartsfield, writing at Big Think, summarizes the current situation.

The generative model programs that power the likes of DALL-E, Midjourney and Stable Diffusion can create images almost “eerily like the work of a real person.” But do AIs truly function like a person, Hartsfield asks, and is it accurate to think of them as intelligent?

“Generative Pre-trained Transformer 3 (GPT-3) is the bleeding edge of AI technology,” he notes. Developed by OpenAI and licensed to Microsoft, GPT-3 was built to produce words. However, OpenAI adapted a version of GPT-3 to create DALL-E and DALL-E 2 through the use of diffusion modeling.

Diffusion modeling is a two-step process where AIs “ruin images, then they try to rebuild them,” as Hartsfield explains. “In the ruining sequence, each step slightly alters the image handed to it by the previous step, adding random noise in the form of scattershot meaningless pixels, then handing it off to the next step. Repeated, over and over, this causes the original image to gradually fade into static and its meaning to disappear.

“When this process is finished, the model runs it in reverse. Starting with the nearly meaningless noise, it pushes the image back through the series of sequential steps, this time attempting to reduce noise and bring back meaning.”

While the destructive part of the process is primarily mechanical, returning the image to lucidity is where training comes in. “Hundreds of billions of parameters,” including associations between images and words, are adjusted during the reverse process.
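
The ruin-then-rebuild idea can be illustrated with a toy Python sketch. It is not OpenAI’s code and makes several assumptions: the “image” is a row of 64 grayscale values, the step count and noise strength (`steps`, `beta`) are arbitrary, and all function names are invented for illustration. Most importantly, a real system relies on a trained network to estimate the noise at each reverse step; this toy simply replays the noise it recorded on the way in, so it shows the mechanics of the steps rather than any learning.

```python
import math
import random


def forward_diffusion(image, steps=200, beta=0.02, seed=0):
    """The "ruining" pass: blend a little Gaussian noise into the image at every step."""
    rng = random.Random(seed)
    keep, add = math.sqrt(1.0 - beta), math.sqrt(beta)
    current = list(image)
    noise_history = []  # remembered only so this toy can undo the steps later
    for _ in range(steps):
        noise = [rng.gauss(0.0, 1.0) for _ in current]
        current = [keep * p + add * n for p, n in zip(current, noise)]
        noise_history.append(noise)
    return current, noise_history


def reverse_diffusion(noisy, noise_history, beta=0.02):
    """The "rebuilding" pass: undo the steps, last one first.

    ASSUMPTION: a trained model would have to estimate the noise here;
    this toy replays the exact noise recorded during the forward pass.
    """
    keep, add = math.sqrt(1.0 - beta), math.sqrt(beta)
    current = list(noisy)
    for noise in reversed(noise_history):
        current = [(p - add * n) / keep for p, n in zip(current, noise)]
    return current


def mean_squared_error(a, b):
    """Average squared difference between two equally sized pixel rows."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# A 64-pixel grayscale gradient stands in for a picture.
image = [i / 63 for i in range(64)]
noisy, history = forward_diffusion(image)
restored = reverse_diffusion(noisy, history)

print("error after ruining:   ", mean_squared_error(noisy, image))
print("error after rebuilding:", mean_squared_error(restored, image))
```

The gap between the two printed errors is the point: the forward pass needs no intelligence at all, while everything hard is hidden in knowing which noise to remove, which is the part a generative model has to learn from data.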

The DALL-E creators trained their model “on a giant swath of pictures, with associated meanings, culled from all over the web.” This enormous collection of data is partially why Hartsfield says DALL-E isn’t actually very much like a person at all. “Humans don’t learn or create in this way. We don’t take in sensory data of the world and then reduce it to random noise; we also don’t create new things by starting with total randomness and then de-noising it.”

Does that mean generative AI isn’t intelligent in some other way? “A better intuitive understanding of current generative model AI programs may be to think of them as extraordinarily capable idiot mimics,” Hartsfield clarifies.

As an analogy, Hartsfield compares DALL-E to an artist, “who lives his whole life in a gray, windowless room. You show him millions of landscape paintings with the names of the colors and subjects attached. Then you give him paint with color labels and ask him to match the colors and to make patterns statistically mimicking the subject labels. He makes millions of random paintings, comparing each one to a real landscape, and then alters his technique until they start to look realistic. However, he could not tell you one thing about what a real landscape is.”

Whatever your stance is on generative AI, we’ve landed in a new era, one in which computers can generate fake images and text that are extremely convincing. “While the machinations are lifeless, the result looks like something more. We’ll see whether DALL-E and other generative models evolve into something with a deeper sort of intelligence, or if they can only be the world’s greatest idiot mimics.”