TL;DR

- “Oppenheimer” offers lessons on the “unintended consequences” of technology, says director Christopher Nolan.

- Emerging technologies, including quantum computing, robotics, blockchain, VR and AI, can become black boxes with potentially catastrophic consequences if we don't build in ethical guardrails.

- Leaders need to understand that developing a digital ethical risk strategy is well within their capabilities, and that management should not shy away from it.

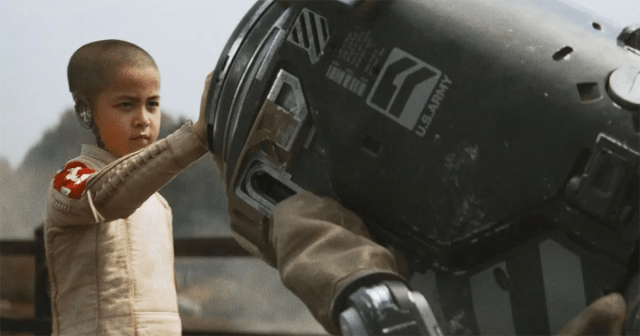

"Beware of what we create" might be the message of Oppenheimer. On the face of it, the film is about the invention of the atomic bomb, but it has obvious parallels to today.

Director Christopher Nolan may have had the nascent Cold War in mind when he began the project, but since then Russia's invasion of Ukraine and the rise of AI have given his film added resonance.

“When I talk to the leading researchers in the field of AI right now, they literally refer to this right now as their Oppenheimer moment,” Nolan said during a panel discussion with physicists, moderated by NBC News’s Chuck Todd. “They’re looking to his story to say, okay, what are the responsibilities for scientists developing new technologies that may have unintended consequences?

“I’m not saying that Oppenheimer’s story offers any easy answers to those questions, but at least it can serve as a cautionary tale.”

Nolan explains that Oppenheimer is an attempt to understand what it must have been like for those few people in charge to have developed such extraordinary power and then to realize ultimately what they had done. The film does not pretend to offer any easy answers.

“I mean, the reality is, as a filmmaker, I don’t have to offer the answers,” he said. “I just get to ask the most interesting questions. But I do think there’s tremendous value in that if it can resonate with the audience.”

Asked by Todd what he hoped Silicon Valley might learn from the film, Nolan replied, “I think what I would want them to take away is the concept of accountability. When you innovate through technology, you have to make sure there is accountability.

“The rise of companies over the last 15 years bandying about words like ‘algorithm,’ not knowing what they mean in any kind of meaningful, mathematical sense. They just don’t want to take responsibility for what that algorithm does.”

There has to be accountability, he emphasized. “We have to hold people accountable for what they do with the tools that they have.”

Nolan was drawing comparisons between nuclear Armageddon and AI’s potential to cause human extinction, but he is not alone in calling on big tech to place the needs of society above its own greed.

In an essay for Harvard Business Review, Reid Blackman asks how we can avoid the ethical nightmares of emerging tech, including blockchain, robotics, gene editing and VR.

“While generative AI has our attention right now, other technologies coming down the pike promise to be just as disruptive. Augmented and virtual reality, and too many others have the potential to reshape the world for good or ill,” he writes.

Ethical nightmares include discriminating against tens of thousands of people, tricking people into giving up all their money, misrepresenting the truth to distort democracy, and systematically violating people’s privacy. Add to these the environmental cost of the massive computing power that data-driven tech requires, along with countless use-case-specific risks.

Blackman has some suggestions for how to approach these dilemmas, but it is up to the firms developing these technologies to address them.

“How do we develop, apply, and monitor them in ways that avoid worst-case scenarios? How do we design and deploy [tech] in a way that keeps people safe?”

It is not technologists, data scientists, engineers, coders, or mathematicians who need to take heed, he says, but the business leaders who are ultimately responsible for this work.

“Leaders need to articulate their worst-case scenarios — their ethical nightmares — and explain how they will prevent them.”

Blackman examines a few emerging-tech nightmares. Quantum computers, for example, “throw gasoline on a problem we see in machine learning: the problem of unexplainable, or black box, AI.

“Essentially, in many cases, we don’t know why an AI tool makes the predictions that it does. Quantum computing makes black box models truly impenetrable.”

Today, data scientists can offer explanations of an AI’s outputs that are simplified representations of what’s actually going on. But at some point, simplification becomes distortion. And because quantum computers can process trillions of data points, boiling that process down to an explanation we can understand, while retaining confidence that the explanation is more or less true, “becomes vanishingly difficult,” Blackman says.

“That leads to a litany of ethical questions: Under what conditions can we trust the outputs of a (quantum) black box model? What do we do if the system appears to be broken or is acting very strangely? Do we acquiesce to the inscrutable outputs of the machine that has proven reliable previously?”

What about an inscrutable or unaccountable blockchain? Having all of our data and money tracked on an immutable digital record is being advocated as a good thing. But what if it is not?

“Just like any other kind of management, the quality of a blockchain’s governance depends on answering a string of important questions. For example: What data belongs on the blockchain, and what doesn’t? Who decides what goes on? Who monitors? What’s the protocol if an error is found in the code of the blockchain? How are voting rights and power distributed?”

Bottom line: bad blockchain governance can lead to nightmare scenarios, such as people losing their savings, having information about themselves disclosed against their will, or having false information loaded onto their asset pages that enables deception and fraud.

OK, we get the picture. Tech out of control is bad. We should be putting pressure on the leaders of the largest tech companies to answer some hard (ethical) questions, such as:

- Is using a black box model acceptable?

- Is the chatbot engaging in ethically unacceptable manipulation of users?

- Is the governance of this blockchain fair, reasonable, and robust?

- Is this AR content appropriate for the intended audience?

- Is this our organization’s responsibility or is it the user’s or the government’s?

- Might this erode confidence in democracy when used or abused at scale?

- Is this inhumane?

Blackman insists: “These aren’t technical questions; they’re ethical, qualitative ones. They are exactly the kinds of problems that business leaders, guided by relevant subject matter experts, are charged with answering.”

It’s understandable that leaders might find this task daunting, but there’s no question that they’re the ones responsible, he argues. Most employees and consumers want organizations to have a digital ethical risk strategy.

“Leaders need to understand that developing a digital ethical risk strategy is well within their capabilities. Management should not shy away.”

But what, or who, is going to force them to do this? Boiling it down: do you trust Elon Musk, Mark Zuckerberg, Jeff Bezos or the less well-known chief execs at Microsoft, Google, OpenAI, Nvidia and Apple, let alone those developing similar tech in China or Russia, to do the right thing by us all?
