TL;DR

- There is growing public concern about the role of artificial intelligence in daily life, finds a new Pew Research report.

- Experts fear that human knowledge will be lost or neglected in a sea of disinformation, and that people’s cognitive skills will decline.

- There is hope that humans will come out on the right side of the "humans+tech" evolution, but the time to embed human values, checks and balances into AI is now.

READ MORE: As AI Spreads, Experts Predict the Best and Worst Changes in Digital Life by 2035 (Pew Research Center)

A growing share of Americans are worried about the role artificial intelligence is playing in daily life — and they have every right to be concerned, according to two new studies from Pew Research Center.

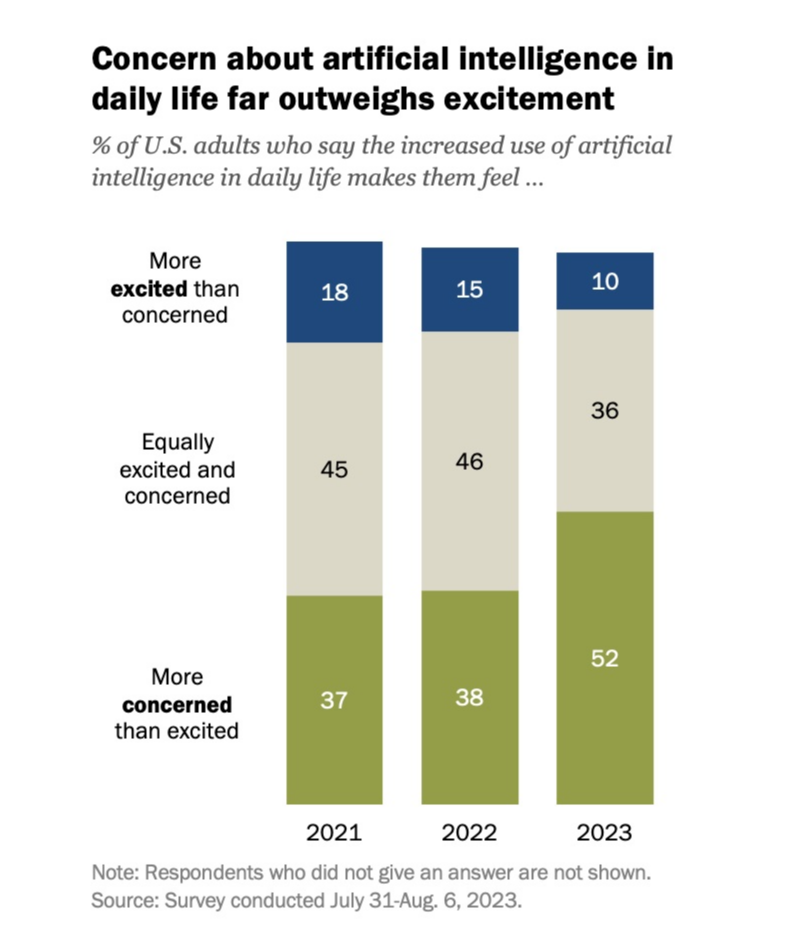

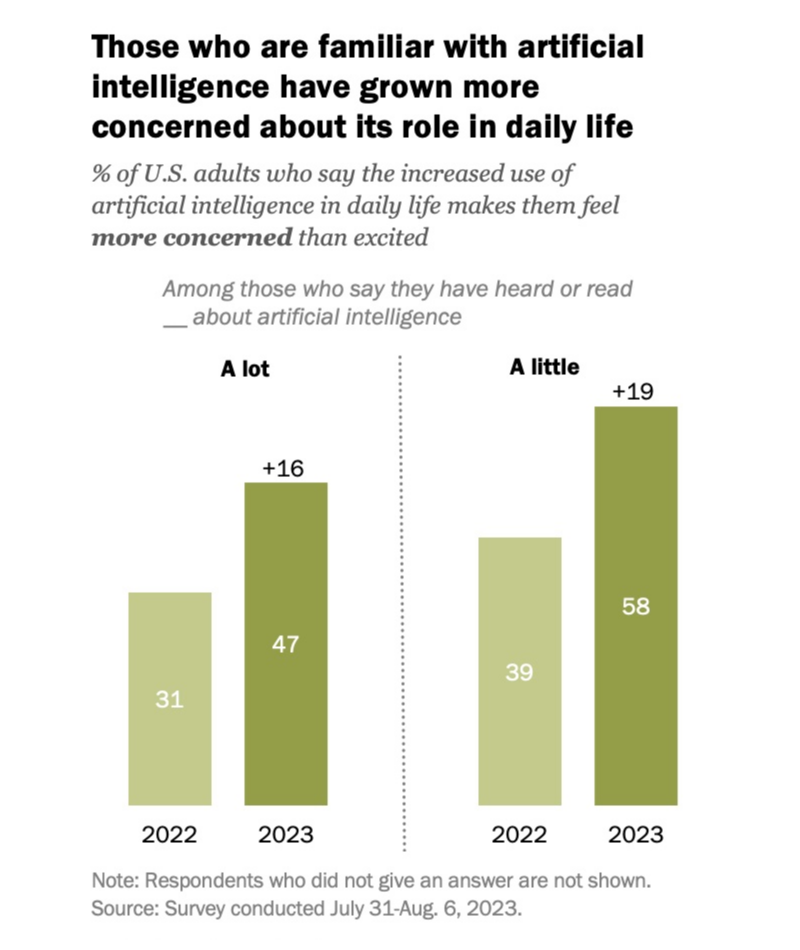

More than half of Americans say they feel more concerned than excited about the increased use of AI, according to Pew's poll of 11,201 US adults, conducted from July 31 to August 6, 2023. That's up 14 points since the previous survey in December 2022.

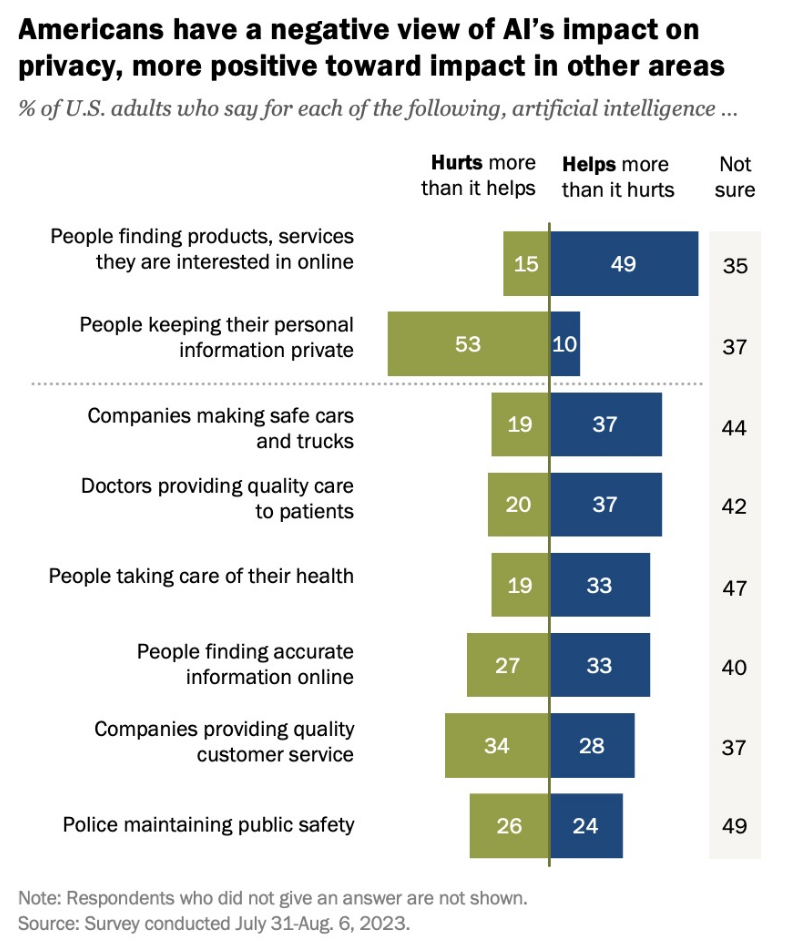

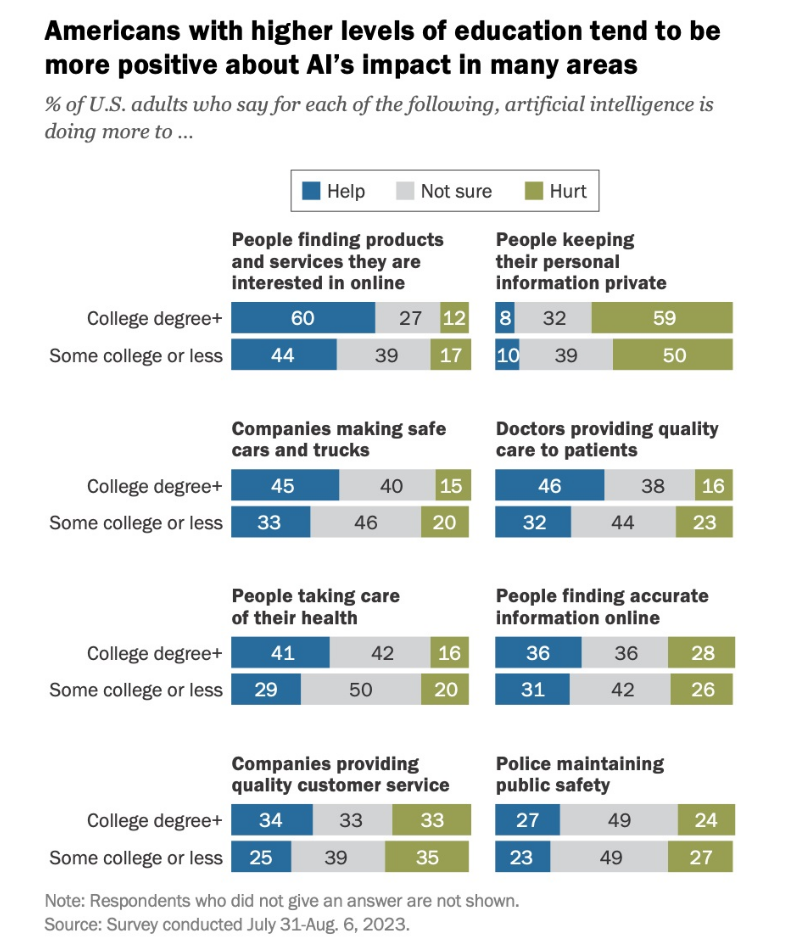

For instance, 53% of Americans say AI is doing more to hurt than help people keep their personal information private. The survey supports previous Pew analyses, which found that the public wants to maintain human control over AI and is wary of the pace of AI adoption in fields like health care.

Although AI's impact remains vague in the general public's mind, concern has reached fever pitch among academics, policymakers and scientists, many of whom worry deeply about the overall well-being of individuals and society. Yet these experts also expect great benefits in health care, scientific advances and education: 42% of them say they are equally excited and concerned about the changes in the "humans+tech" evolution they expect to see by 2035.

In sum, the 350 experts canvassed by the Pew Research Center have great expectations for digital advances across many aspects of life by 2035, but all make the point that humans' choices to use technologies for good or ill will change the world significantly.

Andy Opel, professor of communications at Florida State University, frames the dichotomy in comments for the report.

“We are on the precipice of a new economic order made possible by the power, transparency and ubiquity of AI. Whether we are able to harness the new power of emerging digital tools in service to humanity is an open question.”

We’re on the cusp of making those decisions right now.

The experts who focused on potential harms wrote about their concern that digital systems will continue to be driven by profit incentives in economics and power incentives in politics. They said this is likely to lead to data collection aimed at controlling people rather than empowering them to act freely, share ideas and protest injuries and injustices. They worry that ethical design will remain an afterthought and that digital systems will continue to be released before being thoroughly tested. They believe all of this is likely to increase inequality and compromise democratic systems.

A top concern is the expectation that increasingly sophisticated AI will lead to job losses, resulting in a rise in poverty and the diminishment of human dignity.

Relying on AI to automate story creation and distribution poses "pronounced labor equality issues as corporations seek cost-benefits for creative content and content moderation on platforms," says Aymar Jean Christian, associate professor of communication studies at Northwestern University, in the report.

“These AI systems have been trained on the un- or under-compensated labor of artists, journalists and everyday people, many of them underpaid labor outsourced by US-based companies. These sources may not be representative of global culture or hold the ideals of equality and justice. Their automation poses severe risks for US and global culture and politics.”

Several commentators see positives in digital advances, pointing in particular to their potential democratizing and socially leveling effects.

“The development of digital systems that are credible, secure, low-cost and user-friendly will inspire all kinds of innovations and job opportunities,” says Mary Chayko, professor of communication and information at Rutgers University. “If we have these types of networks and use them to their fullest advantage, we will have the means and the tools to shape the kind of society we want to live in.”

Yet Chayko has her doubts. “Unfortunately, the commodification of human thought and experience online will accelerate as we approach 2035,” she predicts. “Technology is already used not only to harvest, appropriate and sell our data, but also to manufacture and market data that simulates the human experience, as with applications of artificial intelligence. This has the potential to degrade and diminish the specialness of being human, even as it makes some humans very rich.”

Herb Lin, senior research scholar for cyber policy and security at Stanford University's Center for International Security and Cooperation, writes, "My best hope is that human wisdom and willingness to act will not lag so much that they are unable to respond effectively to the worst of the new challenges accompanying innovation in digital life. The worst likely outcome is that humans will develop too much trust and faith in the utility of the applications of digital life and become ever more confused between what they want and what they need."

David Clark, senior research scientist at MIT's Computer Science and Artificial Intelligence Laboratory, says that holding an optimistic view of the future requires imagining several positive developments coming about.

These include the emergence of a new generation of social media, with less focus on user profiling to sell ads, less emphasis on unrestrained virality, and more focus on user-driven exploration and interconnection.

“The best thing that could happen is that app providers move away from the advertising-based revenue model and establish an expectation that users actually pay,” he asserts. “This would remove many of the distorting incentives that plague the ‘free’ Internet experience today. Consumers today already pay for content (movies, sports and games, in-game purchases and the like). It is not necessary that the troublesome advertising-based financial model should dominate.”

A whole section of the report is devoted to experts' thoughts on the impact of ChatGPT and other generative AI engines.

Author Jonathan Taplin echoes many in the creative community: "The notion that AI (DALL-E, GPT 3) will create great ORIGINAL art is nonsense. These programs assume that all the possible ideas are already contained in the data sets, and that thinking merely consists of recombining them. Our culture is already crammed with sequels and knockoffs. AI would just exacerbate the problem."