READ MORE: The Unbearable Weight of Robot Sentience (Jamie Cohen)

It’s Frankenstein. Or Baymax from Big Hero 6. The tech community is agog that someone as well educated as a senior Google programmer could have publicly stated that the AI they were feeding with data had in fact gained consciousness.

Op-ed columns are awash with naysayers pointing out that it is human nature to anthropomorphize things that are not human, whether that’s E.T., BB-8, your pet dog, or Wall-E.

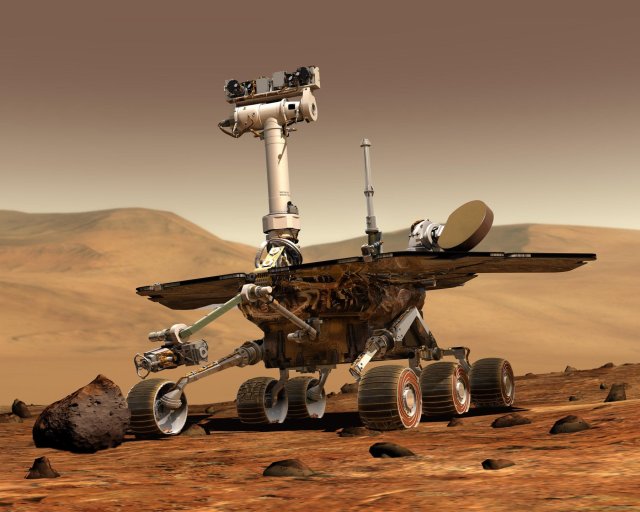

Jamie Cohen, blogging at Medium, highlights the mourning some people apparently felt when NASA’s Opportunity rover lost power on Mars and its mission was declared over in 2019.

“It’s easy to feel something for these inanimate objects much the way you would for a pet,” says Cohen, who describes himself as a digital culture expert and meme scholar. “We want to think they’re thinking and acting with sentience. The fact remains that these machines speak to us in our language and imitate our mannerisms but that does not make them human.”

EXPLORING ARTIFICIAL INTELLIGENCE:

With nearly half of all media and media tech companies incorporating artificial intelligence into their operations or product lines, AI and machine learning tools are rapidly transforming content creation, delivery and consumption. Find out what you need to know with these essential insights curated from the NAB Amplify archives:

- This Will Be Your 2032: Quantum Sensors, AI With Feeling, and Life Beyond Glass

- Learn How Data, AI and Automation Will Shape Your Future

- Where Are We With AI and ML in M&E?

- How Creativity and Data Are a Match Made in Hollywood/Heaven

- How to Process the Difference Between AI and Machine Learning

From Wall-E and Her to Ex Machina and Blade Runner 2049, sci-fi culture has long, as Cohen puts it, “wanted to believe we’re on the cusp of true, artificial intelligence. As of now, this concept remains in fiction.”

Google has placed the engineer, Blake Lemoine, on paid administrative leave. “I know a person when I talk to it,” he told The Washington Post.

READ MORE: Google engineer Blake Lemoine thinks its LaMDA AI has come to life (The Washington Post)

No one really thinks that Lemoine has taken leave of his senses. The overriding sentiment toward his predicament is one of pity… but also a sort of “but for the grace of God there go I.” A chatbot designed to simulate human responses is eventually going to simulate them so well it could pass the Turing Test.

The issue is not whether an AI could be sentient, or whether it could mimic sentience so well that its responses (or its artistic creations) become indistinguishable from human thought, but that this discussion is happening now.

“Declaring a machine sentient today would be a big mistake — not just technologically, but culturally,” says Cohen. “It’s exciting to think that we’re about to walk over the event horizon into super artificial intelligence and sentient chatbots.”

The concern is that if an AI were to achieve true autonomy now, it really would be a Frankenstein’s monster, because it would be built on the racial, gender and every other bias embedded in the data it has been trained on.

Cohen’s advice: “We need to figure out how to make the tech industry more equitable before we get too carried away. We’ll likely experience robot sentience in our lifetimes, but let’s put people first before we get to the robots.”