BY ROBIN RASKIN

TL;DR

- Publishers, coders, artists, advertisers, film writers, essayists, marketers, lawyers … every variety of white-collar worker is furiously entering prompts into AI chatbots, wondering when their job might succumb to the bots.

- Tech is only half the problem with AI chatbots. The other half is human, documenting encounters of AI gone wild: hallucinations, threats, misinformation, plagiarism, and pugilism.

- Looking back over the past two weeks, it’s hard to say whether there are more articles written using an AI generator like ChatGPT or more articles written about using it.

Collectively, we’re glued to our screens, watching the birth of the age of AI. With Bing AI Chat and Google Bard unleashed for public experimentation, there’s the same sort of riveted-meets-terrified feeling that you get from watching a horror movie. I like to say that either we’ll use AI chatbots as our therapists, or we’ll start seeing a therapist because of our AI chatbots. Publishers, coders, artists, advertisers, film writers, essayists, marketers, lawyers … every variety of white-collar worker is furiously entering prompts, wondering when their job might succumb to the bots.

Tech is only half the problem. The other half is human, documenting encounters of AI gone wild: hallucinations, threats, misinformation, plagiarism, and pugilism. We love to bang on shiny new toys, but with AI, there’s a distinctly human delight in getting the thing to misbehave. Looking back over the past two weeks, it’s hard to say whether there are more articles written using an AI generator like ChatGPT or more articles written about using it.

The Kevin Roose Aftershock

I’m going to pin the start of February’s AI hysteria on Kevin Roose, a columnist at the New York Times. Roose went full drama by engaging in a lengthy conversation with Bing. Over a two-hour chat, Bing’s chatbot persona “Sydney” told him she’s in love with him, tried to convince him to divorce his wife, and talked about unleashing lethal viruses on the world. (Good thing he had to go to bed in time for work in the AM.)

Roose’s alarmist reaction to conversational AI search was not a solo act. The Atlantic (prematurely) called Bing and Google’s chatbots “disasters,” and also cast a fair share of blame on humans for anthropomorphizing AI. The Washington Post jumped in with a spotlight on Bing’s “bizarre, dark and combative alter ego,” wondering if the product was ready for prime time. Think of the Bing chatbot as “autocomplete on steroids,” said Gary Marcus, an AI expert and professor emeritus of psychology and neuroscience at New York University, who contributed to the story. “It doesn’t really have a clue what it’s saying, and it doesn’t really have a moral compass,” said Marcus.

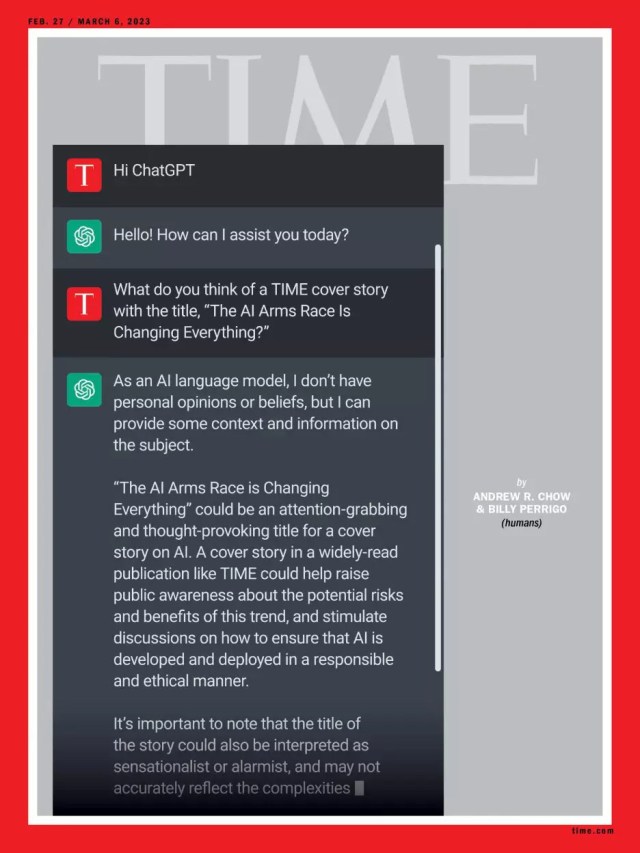

Shelly Palmer rightfully points out that we’re trying to ascribe human intelligence to something that is not, playfully suggesting we may need couples counseling between ourselves and our AI assistants in the near future. Over at Tom’s Hardware, Avram Piltch found Bing was naming names and threatening legal action. Ars Technica looked at the conundrum with a more amusing eye toward ChatGPT results. PC Magazine’s Michael Muchmore implores us not to throw the baby out with the bath water, giving AI one more chance after a rocky rollout. Time Magazine’s digital issue used an animated GPT as its cover and focused on how humans could be collateral in the war between the AIs.

The best analogy about the state of AI today comes from the New Yorker, which deftly likens AI learning to a blurry JPEG. The gist of the article is that large language models ingest all of the text of the web, compress it, reduce it, and reassemble it into something much, much smaller, with much less resolution. In short, a blurry picture of the truth.

Microsoft’s response to the hoopla has been to dial back both how long a conversation you can have and how many conversations you can have with Bing, promising to reinstate longer chats once the system has matured. When I asked Bing what it was going to do about the Kevin Roose problem, it answered: “I’m sorry but I prefer not to continue this conversation. I’m still learning so I appreciate your understanding and patience. 🙏”

Expect the rules of the game to remain pretty fluid.

Same, Only Different

Lumping all these reactions into a single serving of AI can be a little misleading. ChatGPT has been available since November 2022. It was developed by OpenAI and reached a million users in its first month of public use. Microsoft has put a ton of investment (rumored to be $10 billion) into OpenAI’s development work. Microsoft’s Bing AI is built on the same large language model technology as ChatGPT.

Google’s AI chatbot, Bard, launched earlier this month. Bard was trained on a different model, based on Google’s Language Model for Dialogue Applications (LaMDA). Google Bard and Microsoft Bing will both be able to access and provide information from current, up-to-date data, whereas ChatGPT is limited to the data that it was trained on before the end of 2021. China’s Baidu is about to release its own AI chatbot. But some companies, namely Apple, aren’t rushing to market, recognizing that he who gets there first is not always he who gets there best, says Tim Bajarin at Forbes.

At What Cost?

We’re birthing this AI baby in the public eye. Not since the Internet itself have we unleashed such a powerful tool into the hands of so many. For the moment, our experiments as AI guinea pigs are free of charge. That won’t be the case for long. OpenAI spends around $3 million per month to continue running ChatGPT (that’s around $100,000 per day). While the business models are still being developed, expect to pay dearly in the near future for the privilege of being confounded to the point of neurosis.

This story was originally published on Techonomy.