COVID-19 has turned the practice of film and television production upside down, but at the same time it has spurred creatives everywhere to push their equipment and solutions suppliers to introduce products and services that might previously have been bullet points on a road-map wish list.

There are some obvious winners as a result of the crisis. Remote working had been around for a while, but it is now a necessity rather than the trend it was pre-2020. Companies like Zoic Studios in the U.S. and Canada and Jellyfish Pictures in the UK had already invested in solutions like Teradici’s PCoIP. In fact, that company recently won an Engineering Emmy Award “for accommodating the needs of content creators to work remotely.”

The rise of working in the cloud had been swift but is now meteoric in the shadow of the pandemic. Companies like PacketFabric specialize in building private networks to data centers, with low-cost transfer packages. This is data as a service using providers like AWS, Google Cloud, and Microsoft Azure. Microsoft was lagging behind the frontrunners but has caught up: it has just racked up a quarterly profit of $13.9 billion, a 30% increase year-on-year. (Microsoft’s other pandemic-beating software, Teams, has grown from 32 million daily users last March to 115 million daily users recently.)

Dave Ward, CEO of PacketFabric, witnessed the speed of change for cloud services in the creative world: “Through the entire shift of the employee workforce now being remote, the fast forward button has been hit on taking all of creative, whether that’s capture or all the way through post production to distribution into the cloud.” Overall, one of the common goals for creatives was to get a turnkey solution, given the time constraints involved.

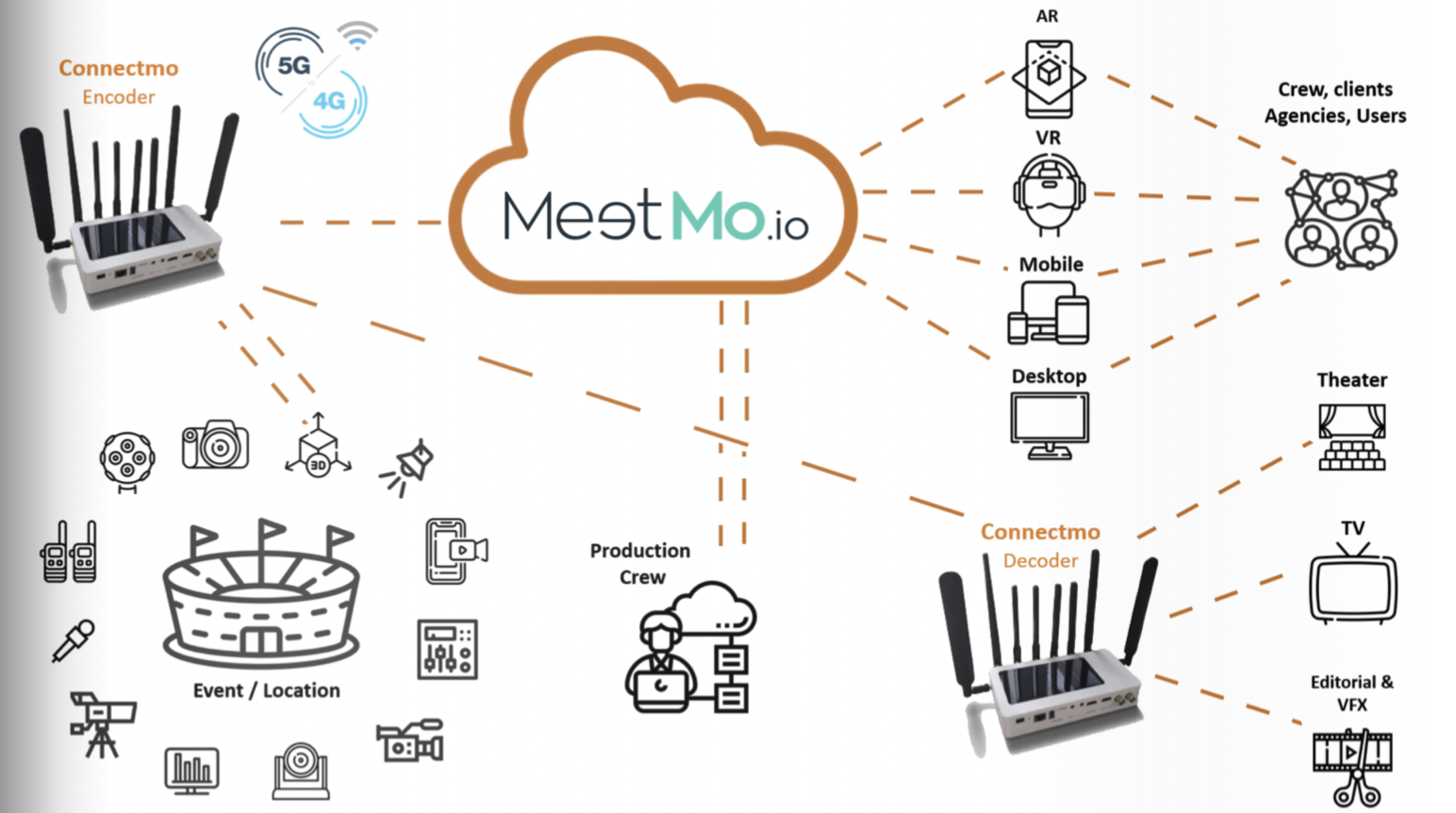

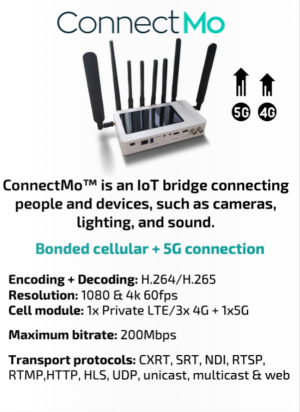

Ward envisions the virtualization of every part of production. That is a huge challenge, and it is likely to arrive top-down, as only the biggest productions would be able to incorporate such a system in the near future. But the integration of all of a production’s threads into a virtual and remote world is underway, and there are already products available that are designed to encapsulate and control these disparate ingredients. MeetMo.io is a new product from Radiant Images that has its own streaming hardware element called ConnectMo. MeetMo is remote virtual production, but it’s probably best to see it as Zoom and Teams on steroids.

It enables collaboration with your production team with the added benefit of control of equipment: cameras, lighting, remote heads, etc. You can access menus and change settings as if you were there, even on 360 cameras. Your clients are included too, with collaboration options where you can draw and write on documents for immediate consultation. The hardware element, ConnectMo, is an encoder/decoder product that is the physical IoT bridge connecting the parts of the MeetMo app. It uses bonded cellular with a 5G connection, H.264 and H.265 encoding, and 1080 and 4K resolutions at up to 60fps. The maximum bitrate at the moment is 200Mbps. It’s also bi-directional, so you can send a signal and receive control.

Subscriptions for the whole package range from the “Freelancer” option at $79.95 a month, which gives you 10GB of outbound traffic with a maximum of six users, to the “Studio” option at $849.95 a month, which includes 2TB of outbound traffic, 24 users, 12 devices and the 4K option. MeetMo calls the product a “virtual video village,” and it could mark the start of a wave of similar super-collaboration virtual tools.
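To put those traffic allowances in perspective, here is a rough back-of-the-envelope sketch of how long each plan's outbound allowance would last at a given stream bitrate. It assumes decimal units (1GB = 10^9 bytes) and a stream running continuously at a constant rate, neither of which is stated by MeetMo. The 20Mbps figure is purely an illustrative lower bitrate, and the function and variable names are hypothetical.

```python
# Rough estimate of how long a plan's outbound allowance lasts while streaming.
# Assumptions (not from MeetMo): decimal units (1 GB = 10**9 bytes) and a
# stream that runs continuously at a constant bitrate.

def streaming_seconds(allowance_bytes: float, bitrate_mbps: float) -> float:
    """Return the seconds of streaming that fit in the allowance."""
    allowance_bits = allowance_bytes * 8
    return allowance_bits / (bitrate_mbps * 1_000_000)

# Outbound allowances quoted for the two plans.
PLANS = {
    "Freelancer": 10 * 10**9,  # 10 GB
    "Studio": 2 * 10**12,      # 2 TB
}

def report(bitrate_mbps: float) -> dict:
    """Map each plan name to its streaming time in seconds at this bitrate."""
    return {
        name: streaming_seconds(size, bitrate_mbps)
        for name, size in PLANS.items()
    }

if __name__ == "__main__":
    # 200 Mbps is the quoted maximum; 20 Mbps is an illustrative lower rate.
    for rate in (200, 20):
        for name, seconds in report(rate).items():
            print(f"{name} at {rate} Mbps: {seconds / 3600:.2f} hours")
```

Under these assumptions, the 10GB allowance covers only 400 seconds (under seven minutes) at the full 200Mbps, while the 2TB allowance covers a little over 22 hours. In practice, streams would likely run well below the maximum bitrate.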

There are already other solutions that could expand to wholesale virtualization. For instance, there is Frame.io, which already runs a highly engineered video collaboration tool. Michael Cioni, VP of technology, has talked about bringing the company closer to set with certain camera-to-cloud, or camera-to-cut, types of conversations. “A robust camera-to-cloud approach means filmmakers will have greater access to their work, greater control of their content and greater speed with which to make key decisions,” says Cioni. “Our new roadmap will dramatically reduce the time it takes to get original camera negative into the hands of editors. Directors, cinematographers, post-houses, DITs and editors will all be able to work with recorded images in real time, regardless of location.” Pix, now part of X2X Media Group with Codex, the high-end recording and encoding system, has offered its online view and approval system for footage for a while, with security at the heart of the offering.

A recent proof of concept saw a Codex server placed on an active set, able to send footage to Pix customers every time a camera cuts. A Pix user with the right credentials can then immediately start sharing and collaborating on the content, including perhaps a dailies colorist who would be able to apply a color profile. The camera originals are sent to the cloud at the same time. Marc Dando, X2X’s CDO, says the system’s abilities can even be extended: “Also, if you wanted, you could be doing a turnover for VFX within about ten minutes.”

Without too much imagination, the essence of a fully virtualized production system is there to see. Perhaps a combination of Frame.io, Pix and MeetMo could be a template of what is to come. Michael Mansouri of Radiant Images, the parent company of MeetMo, explains his vision: “MeetMo is a remote collaboration platform and the studios were hungry for it. It does a lot because when you collaborate you need a lot of tools. But this is very simple and not drowned in functions and features,” he says. “After the pandemic hit, Hollywood had to re-think itself and the conclusion was that everything had to be virtualized; the infrastructure, the networks so you could send your production anywhere with just a click. We were looking beyond the use of a broadcast truck with satellite uplink. You could be anywhere in the world, you just needed a web link. You could then control devices anywhere on the network.”

The device control is deep and goes beyond changing frame rates and shutter angles using menus. MeetMo wants to allow you to use your voice to do it, especially if you’re trying to change a parameter, for instance to a Log-C setting on a camera, which could take seven menu and submenu steps (12 on a Sony Venice camera). MeetMo would also allow you to see what has been shot within a “volume” or 3D file sent back to you without downloading any new software. There will also be gesture control available at some point.

The world beyond the output of the camera is changing, but what about the capture world itself? For most single camera productions in the shadow of the pandemic, that’s staying roughly the same, except for maybe changing prime lenses to zooms to further reduce crew levels and using remote heads to keep actors safe from any COVID-19 transmission. As a result, schedules can be squeezed, but generally there isn’t much time saving. However, there are questions being asked of traditional digital cinematography, especially in light of the rise of virtual capture and of feature-laden consumer smartphones: a LiDAR scanner on a consumer device, as there is on the new iPhone 12 Pro, was unthinkable even 12 months ago.

As part of a conversation with cinematographer Roger Deakins, Franz Kraus of ARRI has said that the future for professional TV and movie cameras will probably include some type of computational imaging element controlled by AI. There will be camera arrays that can capture a volume much like volumetric and light-field imaging can today. But he accepts that cinematography is a very conservative industry and so major development (apart from sensors) in the capture world will take time and lots of investment. Lens extended data systems like the ones from Zeiss and Cooke will develop and perhaps become more app-like if you’re looking to simulate lens types from the camera, but what about lenses themselves? Will they get smaller, and is there still the variety of legacy glass there once was? Waiting in the wings, of course, are liquid lenses, but they’re way off in the future.

The feeling is that as far as cameras are concerned, there will be more options to virtualize from the lens and initially those cameras might be regarded as specialty products with ultra high sensitivity and high rental prices to boot. The size of cameras will continue to decrease especially when attached to cloud services but also as more remote shooting is demanded.

And as for moving the camera, as we saw with The Lion King last year, director Jon Favreau and his team ignited virtual production and sweetened the pill by bringing in live action veterans like DP Caleb Deschanel to make the movie capture more realistic. You now have companies like Motion Impossible, whose Agito remote-controlled dolly system also feeds its real world movement into game engine technology. The Agito is a new robotic device that will no doubt be seen soon on film and TV sets, where such a remotely controllable system is an easy choice for COVID-19 compliance. UK company Motion Impossible has just partnered with US re-seller Abel Cine to start distribution in the USA and has formed Motion Impossible US at the same time.

There will be much more innovation in moving the camera in the future, especially with new ways to stabilize it. But the launch of DJI’s Mini 2 drone at only 249g take-off weight reminds us of the predictions of Steadicam inventor Garrett Brown over 10 years ago, as he was thinking of new methods of shooting the London Olympics back in 2012. “I have a couple of shots in my hip pocket that I’d love to make. On our website we jokingly referred to a Molecam, which was a pure bit of whimsy, but I’ve actually had people calling me trying to book the Molecam. You know it digs underground when it hears some action and then pops up to shoot. I’d like to do a camera hiding under the in-field rail in racing, just 10 to 15 feet out in front of the lead horse, low and wide angle that’s also capable of sending a high-def image back somehow by fibre optic with microwave or something like that.”

“More miniaturization is needed, which allows our gadgets to be less conspicuous. I’ve seen talk in the last 10 years about robotic ‘Dragonfly’ type cameras. Dragonflies are interesting because of the double set of wings that gives it an inherently stable platform. I bet that in my lifetime there will be remotely controlled, directable cameras the size of Dragonflies that are buzzing along with every runner on the track looking over their shoulder. There’s nothing stopping that stuff from being the size of a fly. As soon as you have the microwave technology to get the image and discriminate it from all the other little flies you’ve sent out there. You need to stabilize the image and control it. I have a camera already in my iPhone that would fit cheerfully on-board a robotic Dragonfly with a lens 1/16th of an inch across at 2 megapixels.”

Our final supercharged technology spawned by the pandemic should be no stranger to anyone with a Disney+ subscription as there is a whole behind the scenes episode about it under the Star Wars banner: Virtual set technology using LED panels. Disney’s The Mandalorian Star Wars spin-off series used this method heavily and there’s news that The Batman, currently shooting at Warner Bros. in the UK, will be using it too.

To give the technology some context, back in 2013 DP Claudio Miranda and director Joseph Kosinski shot the Tom Cruise movie Oblivion. The team, apart from Cruise, had previously shot Tron: Legacy, where Claudio and his director tried their best to shoot as much in-camera as they could. For Oblivion, another VFX-heavy movie, they wanted to do the same.

In the movie there is what was known as a Sky Tower, which in the story is hundreds of feet above the ground. Claudio wanted to avoid blue screen to shoot the Sky Tower scenes. “If we had done it blue screen then all your art department choices like textures would have to change. If it originally was going to be gloss it would have to be semi gloss. No chrome is allowed because it just ends up being blue. You could put chrome in, but the effects would have to go on top of it. But now we’ve got real chrome we can put the glass in and now we have a real environment. I can use smoke if I want to, I can do anything!”

They ended up using 21 front projectors to cover a width of 500 feet with a 15K background shot with RED cameras from a mountain in Hawaii. Today’s in-camera VFX brings that approach up to date through the cohesive marriage of cameras, camera tracking, screen processing and game engines. The “volume wall” follows the same idea as Claudio’s back in 2013 but is much smarter, using game engines to render the VFX in real time. In fact you could say that VFX has become the new capture medium: for The Mandalorian series there was a bank of post production artists, seated on what is now called “the talent bench,” changing the footage on the stage in real time. They prepare the scene for the action, a truly collaborative event, turning traditional set disciplines on their head.

This marriage of game engine VFX and screen processing is perfect for the times we are living through at the moment — big Hollywood stars, for instance, might be able to shoot their scenes without going on location, which would help calm nerves in the event of an infection spread. You could even use smaller volume studios to shoot low-cost reality shows or soap operas. It’s a huge production change and one we will be keeping a close eye on.

Read our interview with disguise’s CTO Ed Plowman about that company’s change of direction from live events to virtual production based on LED screen and game engine technology:

disguise Unmasked

In light of recent events, disguise has pivoted their business from live events to virtual production. NAB Amplify asked disguise CTO Ed Plowman how he envisions the company’s next stage of development:

NAB Amplify: Virtual sets have been with us for a while, but non-keying technology with LED screens is in its infancy. How will it evolve? Is it a matter of being able to implement all that the game engines can offer you, or will the R&D race be about much higher resolutions but still with near-instant render of an engine plug-in? Are we waiting for on-screen technology to develop?

Ed Plowman: Yes, the technology is in its infancy. There are a number of use cases that haven’t been explored yet, including using the LED wall as an active lighting medium. This has been somewhat explored within the film industry using so-called LED caves to create realistic ambient lighting effects using the out of frustum portions of the display. However, we still have a long way to go in exploiting this technology to its full potential as LEDs as an output medium have the capacity for extremely high dynamic range. This will reach its peak when the LED screen processor, camera and media processing can act as a cohesive system. There is far more technology to explore and exploit in this area.

The camera is a key part of the overall system, which is often overlooked or poorly understood. Being able to tweak camera settings (shutter angle, exposure, etc.) to balance or match LED capability is something that is currently evolving. Our aim is to give maximum creative flexibility while maintaining the best possible quality. In order to do this effectively, we need to add some missing communication and data channels between these system elements.

In terms of the real-time graphics engines that drive the LED walls, it’s not so much a race to implement everything in the game engine, but the need to produce effects that look good enough in the camera while maintaining the real-time performance. The requirements of a game engine versus a real-time visual effects engine may be very different, but it’s often easier to scale up than down. There are also options in the visual effects space to scale using techniques such as cluster rendering, which aren’t really options for your average home gamer.

Screen technology will inevitably get better. Pixels will get smaller, the density will increase, walls will get larger; but this is only a small part of the overall system. To focus on this is to miss one of the key elements of LEDs as a display technology. The key exploitable benefit of LEDs is the fact that they are active lighting mediums.

NAB Amplify: What will the next five years be like for disguise? Will you become more of a media server software company supplying companies like White Light as expert installers, or will you be looking to push new capture techniques as they arrive with your hardware products?

Ed Plowman: I think as 2020 has proven, anyone’s five-year plan can change in an instant! The key thing is to keep an open and agile approach to innovation and development. The disguise long-term objective is to become the platform to bring different visual computing technologies into the hands of creative people and make them work to their benefit. We have a long history of innovation and are definitely looking to continue that innovation into new areas both on the hardware and software side.

NAB Amplify: I can see most production companies investing in some kind of volume studio. How long will this technology take to break into the mainstream and be as affordable as, say, a small edit suite is — sub-$10,000?

Ed Plowman: Technology affordability is connected to the return on investment you can get from the initial outlay. I’m not quite sure we will ever see a fully functioning system under $10,000, but never say never, right? I think in terms of breaking into the mainstream and being as pervasive as techniques such as green screen/chroma key, we could definitely see that happening within a 2-3 year window.

NAB Amplify: Can you see game engine technology other than Unreal, Unity and Notch being introduced, or are their development programs just too advanced?

Ed Plowman: The real-time graphics engines you mention certainly have a head start in this area, but games have been using cinematic sequences as part of their narrative/storytelling for some time. There are some very interesting in-house technologies out there and the investment in them is significant. Game development houses also own IP in the form of visual assets, so it’s not unthinkable that they might want to use both the assets and engine expertise to add another revenue stream to support that investment. Some even have access to content beyond gaming, which has an inbuilt fan base (e.g. Rebellion’s 2000AD/Judge Dredd portfolio).

Born in 2000 out of a pioneering new technology, disguise was first developed to deliver large visuals at concerts for the likes of Massive Attack and U2. Today, disguise is a global operation with offices in London, Hong Kong, New York, Los Angeles and Shanghai, with technology sold in over 50 countries.