TL;DR

- The World Economic Forum highlights the dangers of algorithm-driven social media feeds and deepfakes in its 2024 Global Risks Report, citing misinformation as the main issue impacting global politics and security over the next two years.

- With elections coming in the US, India, Mexico, Indonesia, the UK, and the EU in 2024, monitoring online activity for dis- and misinformation is essential — but unlikely to work.

- False information and societal polarization are linked, the WEF reports, with the potential to amplify each other. Efforts to counter, regulate and monitor social media networks are in the works, but these could be “too little, too late.”

READ MORE: Global Risks Report 2024: The risks are growing — but so is our capacity to respond (World Economic Forum)

In 2024, we will face a grim digital dark age, as social media platforms transition away from the logic of Web 2.0 and toward one dictated by AI-generated content, says Gina Neff, executive director of the Minderoo Centre for Technology and Democracy at the University of Cambridge. Writing for Wired, she says online trust will reach an all-time low thanks to unchecked disinformation, AI-generated content, and social platforms pulling up their data drawbridges.

READ MORE: The New Digital Dark Age (Wired)

Her view is echoed in a new report by the World Economic Forum, which highlights the risk that AI-generated mis- and disinformation will exacerbate a cost-of-living crisis and socio-political polarization.

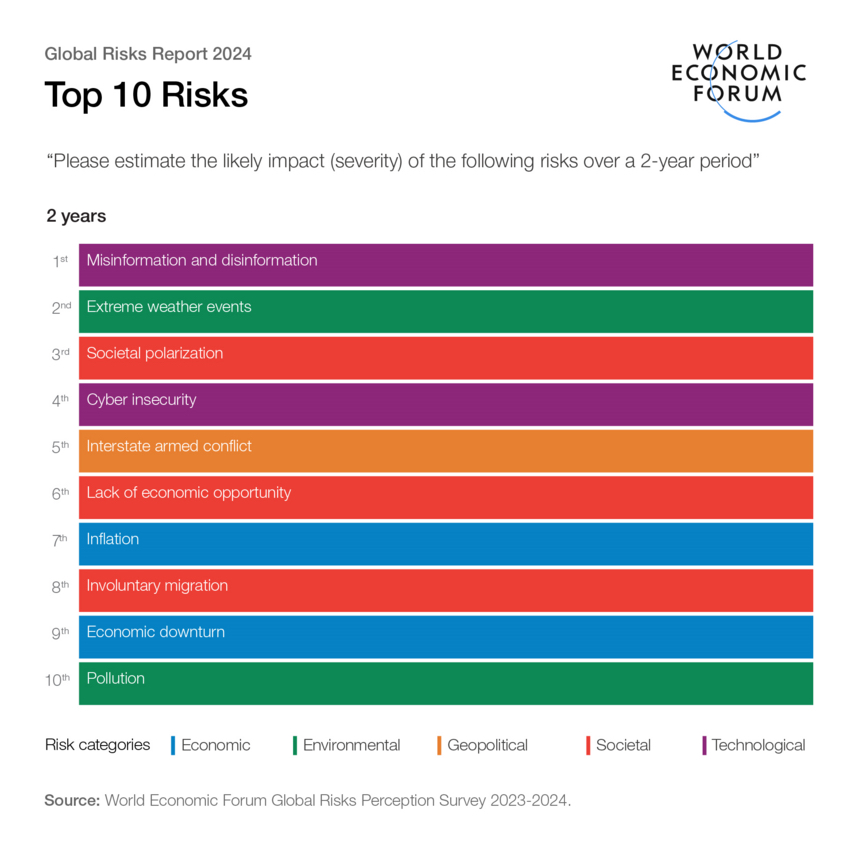

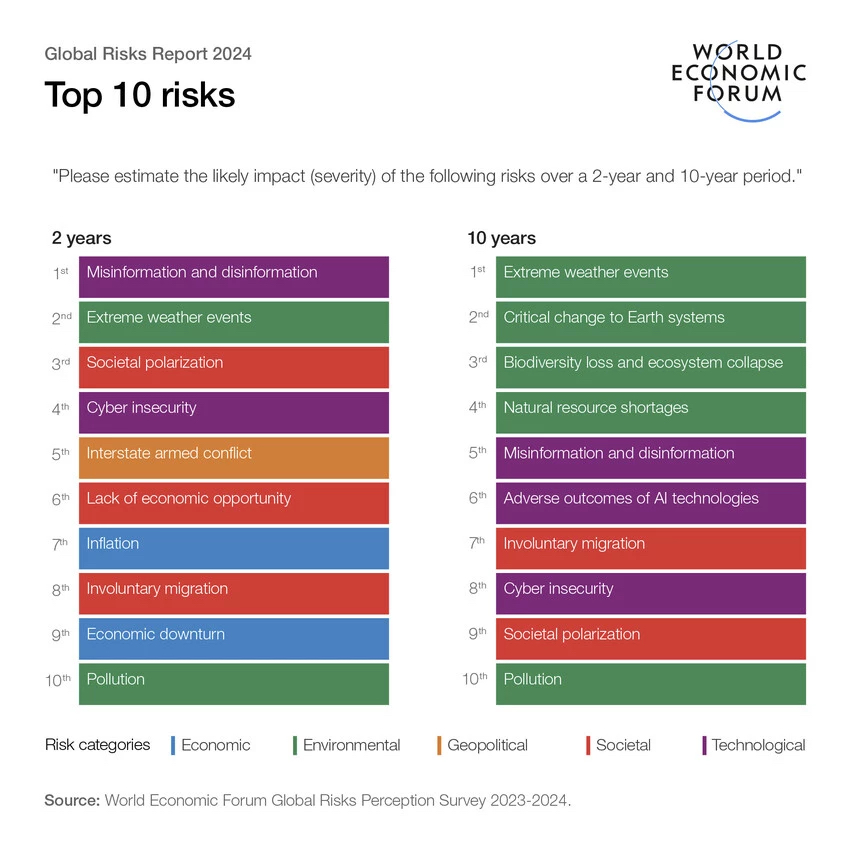

The WEF’s 2024 Global Risks Report is based on the views of 1,500 global risks experts, policy-makers, and industry leaders. It finds that the world’s top three risks over the next two years are false information, extreme weather, and societal polarization.

The threat posed by mis- and disinformation takes the top spot in part because open access to increasingly sophisticated technologies may proliferate, disrupting trust in information and institutions.

“The boom in synthetic content that we’ve seen in 2023 will continue, and a wide set of actors will likely capitalize on this trend, with the potential to amplify societal divisions, incite ideological violence, and enable political repression,” said Saadia Zahidi, MD and head of the Centre for the New Society and Economy at the WEF.

What’s more, false information and societal polarization are linked, with the potential to amplify each other. Zahidi said, “Polarized societies may become polarized not only in their political affiliations, but also in their perceptions of reality. That can have a profound impact on many crucial issues ranging from public health to social justice and education to the environment.”

These trends are occurring at a time of heightened economic hardship for many people around the globe. Together, this “potent mix” of economic distress, false information, and societal divisions can create challenges for many societies, “providing fertile ground for continued strife, uncertainty, and erratic decision-making,” the WEF warns.

This has broad repercussions for the long-term outlook. A decade from now, according to the WEF’s Global Risks Report, the top three risks are all related to the climate emergency: extreme weather, change to Earth systems, and biodiversity loss. Mis- and disinformation stays high on the agenda at number five, followed by other adverse outcomes of AI technologies at number six, and involuntary migration at number seven, while societal polarization also stays in the top 10.

In response to the uncertainties surrounding generative AI and the need for robust AI governance frameworks, the Forum has launched the AI Governance Alliance.

The aim of the Alliance is to unite industry leaders, governments, academic institutions, and civil society organizations to champion responsible global design and release of transparent and inclusive AI systems.

Benjamin Larsen, the WEF’s Lead on AI and ML, says, “Sustained dialogue lays the groundwork for greater cooperation and a potential reversal of digital fragmentation.”

Neff laments the shutdown of access to user data on social media sites like Twitter or Facebook. “Companies have rushed to incorporate large language models into online services, complete with hallucinations (inaccurate, unjustified responses) and mistakes, which have further fractured our trust in online information,” she says.

To clean up online platforms and prevent the excesses of polarization, she calls for the adoption of the STAR Framework (Safety by Design, Transparency, Accountability, and Responsibility). She says it would ensure that digital products and services are safe before they are launched; increase transparency around algorithms, rule enforcement, and advertising; and work to hold companies both accountable to democratic and independent bodies, and responsible for omissions and actions that lead to harm.

The EU’s Digital Services Act is another regulatory step in the right direction, but its capacity to ensure that independent researchers can monitor social network platforms will take years to be actionable. The UK’s Online Safety Bill, slowly making its way through the policy process, could also help, but again, these provisions will take time to implement.

Until then, Neff says, “the transition from social media to AI-mediated information means that, in 2024, a new digital dark age will likely begin.”
