Ridley Scott’s Blade Runner was set 37 years in the future, in 2019. It’s the film that launched a thousand on-screen imitators, but how does its dystopian vision match up to reality?

As with Minority Report — another Philip K. Dick film adaptation — there are dozens of ways the film got future tech right, nearly right, and way off the mark. Here are a few:

PC Production Design

In 1982 the first PCs, like the Commodore 64, were coming to market. Tron, made with groundbreaking computer graphics, was released in cinemas that year. Before the opening titles of Blade Runner, the Ladd Company logo is animated to resemble green pixels crossing a computer screen, a concept that became the aesthetic DNA of The Matrix 17 years later.

The opening sequence of a dark cityscape and industrial explosions is based on Scott’s memory of growing up around the heavy industry of the North East of England, made real by Doug Trumbull’s stunning in-camera visual effects.

More Human Than Human

Replicants (genetically engineered humanoids, or “skin jobs”) are the heart and soul of Blade Runner. To the best of our knowledge we aren’t sharing our jobs, homes and lives with any yet, but in the intervening years visual effects techniques have evolved to deliver convincing (and controversial) facial or full-body performance recreations of actors living and dead.

Exhibit A in the former camp is the digital body double for original actress Sean Young in Denis Villeneuve’s sequel Blade Runner 2049. The decision to deem Young unsuitable to play an older version of herself while 74-year-old Harrison Ford got to continue as Deckard (and yet both could be replicants, so surely not aging?) perhaps wouldn’t have been greenlit if #MeToo had been as prominent then as it became after the film’s release.

In the latter camp, see Peter Cushing and Carrie Fisher in the Star Wars series or James Dean in war movie Finding Jack.

READ MORE: Resurrecting James Dean Has Begun a Concerning Trend (Film School Rejects)

Video Phone

Being able to see the person you are calling is a longtime trope of science fiction, including in films like 2001: A Space Odyssey (on which Trumbull also worked), and it seemed like a novelty until only a few years ago.

Now, we’re all at it. The pandemic has shown us that people are comfortable in their individual digital lives. Blade Runner’s retro-future, though, had Deckard dial up manually, and the call apparently cost him $1.25.

Flying Car

Deckard’s cop cruiser is so cool I had to take this picture of (apparently) the actual model used in the film, on show at Universal Studios Orlando in 1989.

Perhaps the nearest we can get to the experience today is the driverless cars being trialled around the world by Tesla and others.

Manned electric quadcopters have been proposed for use as taxis in Dubai, while automotive makers including Toyota, Fiat and Hyundai have prototyped Vertical Take-Off and Landing Craft similar to Deckard’s. GM debuted its version at this year’s virtual CES. Hyundai says it’ll have regional air mobility operating between cities by the 2030s.

READ MORE: Dubai Drone Taxis and Flying Cars in Dubai (UAE Quadcopters)

READ MORE: Yes, GM Showed a Flying Car at CES! (Autoweek)

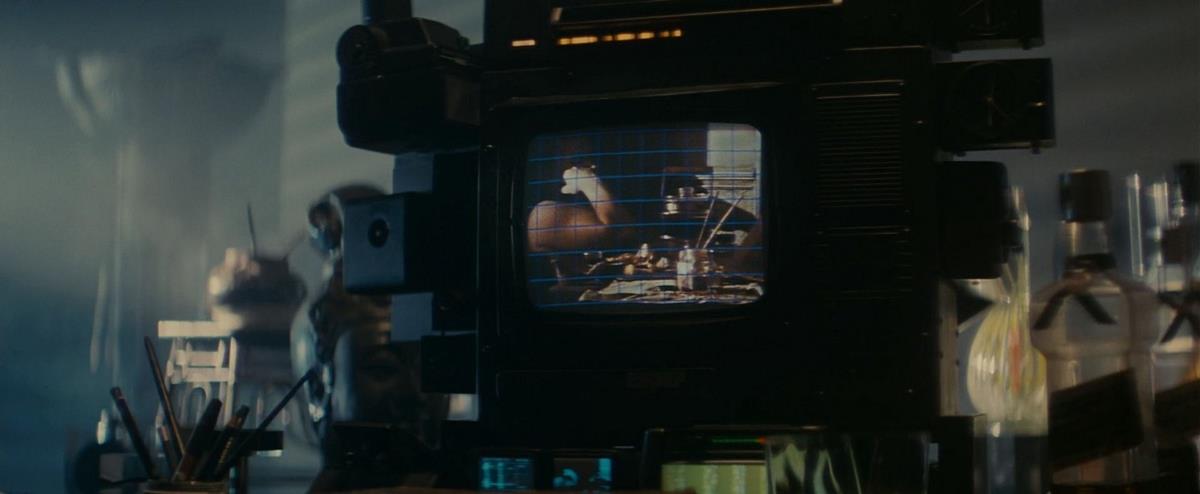

Image Manipulation

Perhaps the most intriguing tech is Blade Runner’s Esper machine, which is seemingly able to create a parallax in a 2D image and look around the walls of a bathroom to reveal something not previously apparent.

RedSharkNews suggests the machine is using some sort of artificial intelligence to fill in the gaps, and points out that AI image reconstruction shouldn’t always be relied on for objective truth.

“More advanced AI codecs might make that sort of mistake even easier to make, and even more convincing, considering what AI can already do with faces. Work is being done on AI ethics, and a good thing, too, though it’s easy to imagine commercial expediency outpacing regulatory efforts in the field.”

The Esper machine is also voice controlled, something that seems matter-of-fact now that we give commands to AI voice assistants.

READ MORE: Blade Runner was set in 2019. How good were its technological predictions? (RedSharkNews)

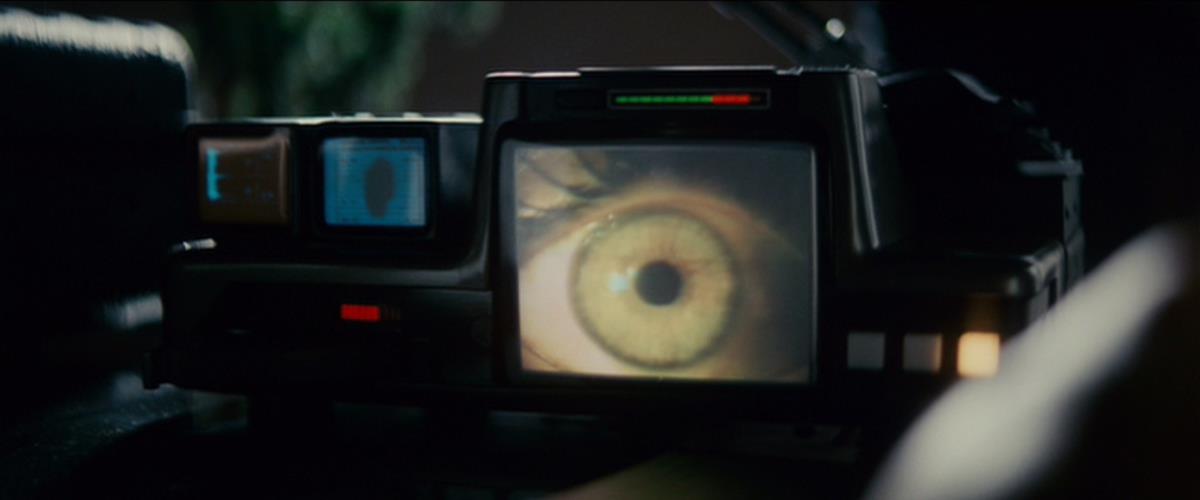

Proving Your Reality

More sinister is the Voight-Kampff machine, designed to interrogate people through obscure questions and answers to determine whether they are a replicant. It gauges their response by the dilation of their pupils. In essence it judges whether they “feel” beauty or pain like a human, and therefore whether they are merely the sum of their implanted memories.

There’s a whole philosophical game to play here about the duality of existence which thinkers from Plato to Descartes have contemplated and failed to solve.

If you think you’ve an answer, how do you know? With humankind about to take a deep dive into the simulated worlds of the Metaverse, the matter is going to get a lot more complicated.

Meteorological Not Technological

In Scott’s version of 2019 Los Angeles it rains. A lot. That hasn’t come to pass in the real Los Angeles. But with climate change, there’s still time.

Minority Report Watch

Like Blade Runner, Steven Spielberg’s 2002 film Minority Report also had autonomous vehicles and giant, personalized neon animated billboards, as well as the ability for detectives to scrub through the timeline of personal memories — video that can be manipulated to reveal more than is apparent on the surface.

Pre-cog, the ability to “see” crime before it’s committed, is the Orwellian feature at the film’s core. Yet just this week it’s been reported that the Chinese state is using AI and facial recognition to reveal the emotional state of its citizens. In this case, the camera has been tested on Uyghurs in Xinjiang.

The advanced version of a lie detector analyses minute changes in facial expressions and skin pores and is intended, according to a witness, for “pre-judgement without any credible evidence.”