READ MORE: Why PXO created a real-life ‘Holodeck’ for ‘Star Trek: Discovery’ (Unreal Engine)

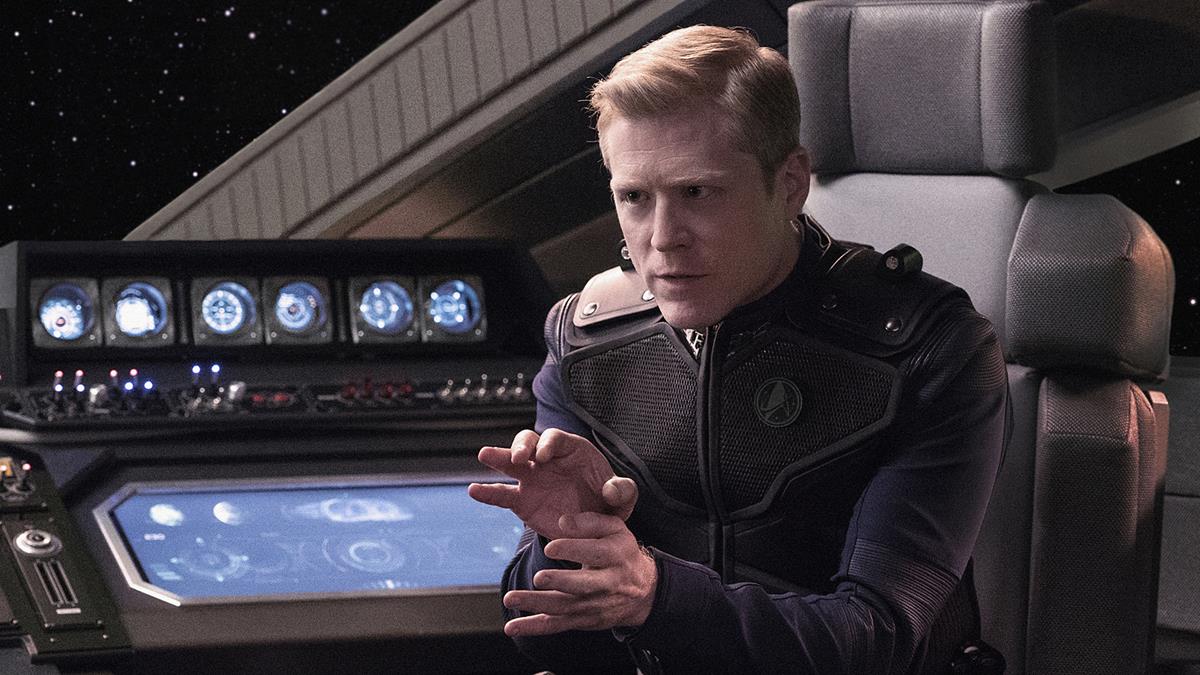

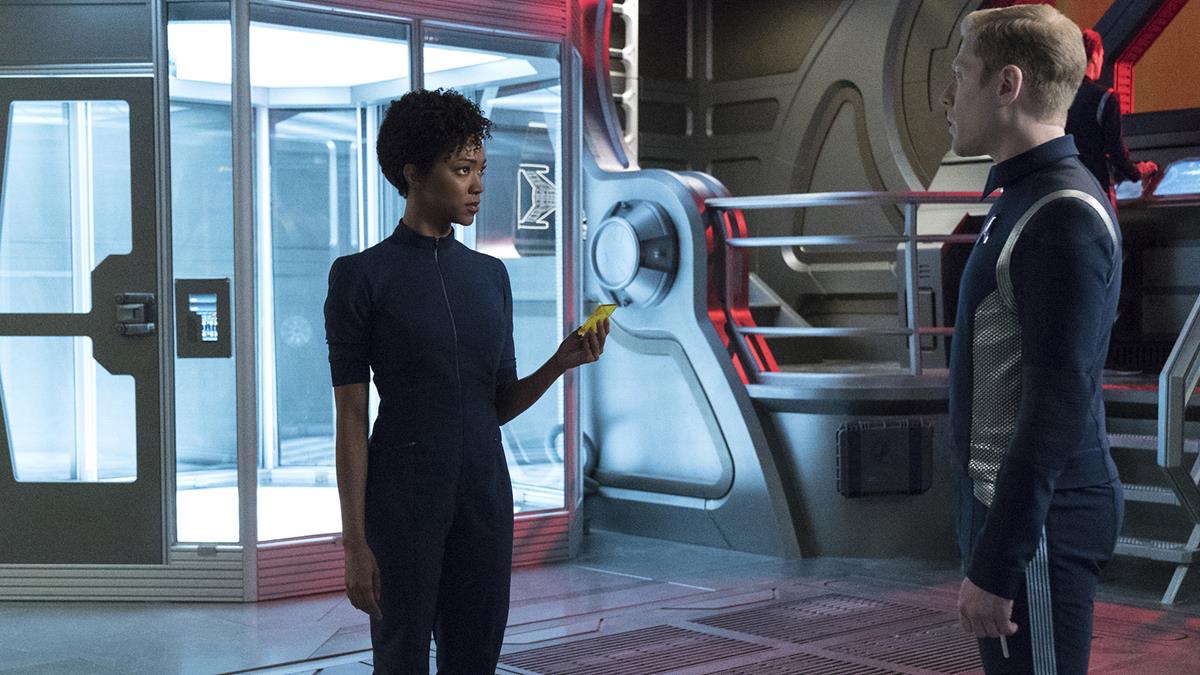

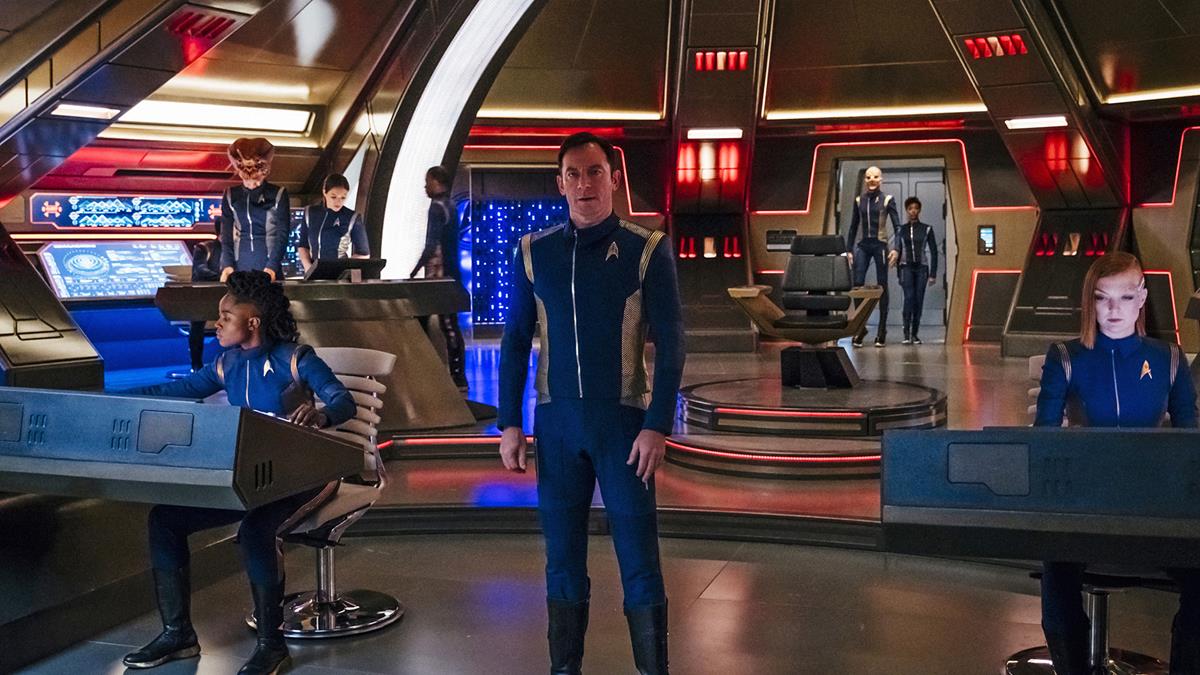

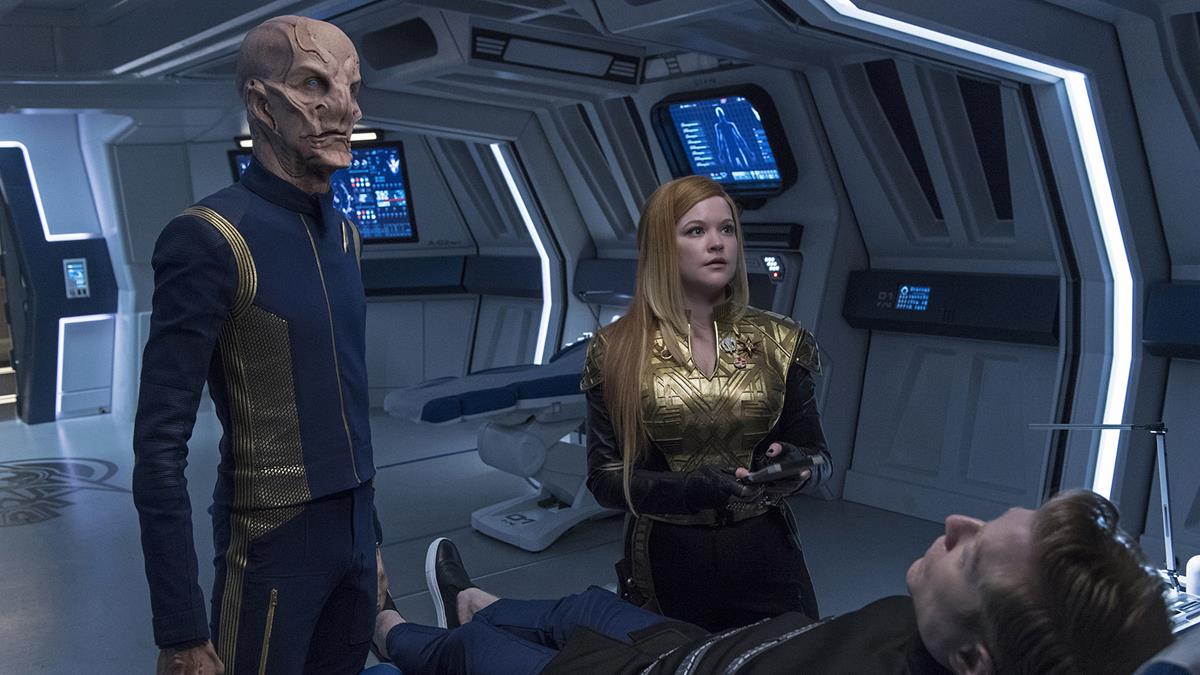

The latest season of Star Trek: Discovery boldly goes where no previous Star Trek series has gone before.

For the fourth season of the Paramount franchise, the producers turned to virtual production. VFX house Pixomondo (PXO) has even nicknamed its virtual production stage in Toronto the “Holodeck.”

The stage includes an LED wall outfitted with 2,000 panels, with another 750 panels on the ceiling, and measures 72 x 85 x 24 feet. More than 60 OptiTrack cameras surround the stage to provide tracking, and it’s all run by over 40 high-end GPUs running Unreal Engine’s nDisplay to synchronize the 2,750+ panels.

Nearly every episode of the 13-part season has at least one scene shot against the AR wall, details a production case study on the Unreal Engine site. Environments range from familiar locations like the Discovery shuttle bay to fantastic locations like the Kaminar Council Chamber, located deep underwater.

Watch this: PXO’s work on “Star Trek: Discovery”

To create each new environment for the AR wall, the process begins in pre-production, with PXO’s virtual art department creating prototypes for each environment. To quickly create a rough representation for blocking, the team sources elements from the Epic Games Marketplace, adapts them as needed, and adds them to the scene. All the assets were created in Unreal Engine.

“Using a procedural approach directly in Unreal Engine ended up being the better approach,” said VFX supervisor and PXO head of studio Mahmoud Rahnama. “Not only did this allow for much higher-resolution assets in UE, it yielded similar results to our offline render, but also dramatically reduced our average offline render time.”

Rahnama says PXO was able to totally abandon its offline rendering approach to cleanup work, one of the most thankless — but necessary — parts of any VFX artist’s job. That also created a domino effect, freeing up other groups across the board.

“It’s great being able to focus the team’s attention on the shots they feel excited to work on rather than some of the more mundane work. That is one of the many creative benefits for artists working in virtual production.”

The lighting team especially saw a major shift. During the first season of Discovery, it could take up to 14 hours for a lighting bake to finish. Using Unreal Engine’s GPU Lightmass Baker, high-quality bakes can be done in as little as 30 minutes.

One of the biggest changes, however, is somewhat intangible. In previous seasons, each location would be divided into multiple tiles, and individual tiles would then be assigned to an artist. Once the tiles were complete, they were reviewed independently and the complete environment was only assembled for the final batch of reviews.

During Season 4, PXO’s artists could all work in the same scene simultaneously. That not only led to a more cohesive and consistent environment, it also enabled artists to collaborate and inspire one another. Plus, as an added bonus, according to Rahnama, “when artists know people will see their work on a daily basis, it tends to significantly improve organization.

“Right now, the speed at which you can shoot a sequence and cut it together with 80% of the shots completed and not have to wait months to see final VFX is a game changer. Soon, it’s going to be a standard way to get everything out the door, and onto people’s screens, even faster.”

A number of new shows are using VP as the basis for production, among them Star Trek: Strange New Worlds (see below) and HBO’s Game of Thrones prequel House of the Dragon.

The Holodeck at PXO can’t conjure a Klingon bat’leth out of thin air just yet, but give it time.

A Brief Voyage Through the History of Virtual Production

While virtual production is definitely having a “moment” in Hollywood and beyond, VP technologies and techniques have by no means just appeared overnight, cinematographer Neil Oseman observes in a recent blog post. The use of LED walls and LED volumes — a major component of virtual production — can be traced directly back to the front- and rear-projection techniques common throughout much of the 20th century, he notes.

Oseman takes readers on a trip through the history of virtual production from its roots in mid-20th century films like North by Northwest to cutting-edge shows like Disney’s streaming hit, The Mandalorian. Along the way, he revisits the “LED Box” director of photography Emmanuel Lubezki conceived for 2013’s VFX Academy Award-winner Gravity, the hybrid green screen/LED screen setups used to capture driving sequences for Netflix’s House of Cards, and the high-resolution projectors employed by DP Claudio Miranda on the 2013 sci-fi feature Oblivion. Oseman also includes films like Deepwater Horizon (2016), which employed a 42×24-foot video wall comprising more than 250 LED panels, Korean zombie feature Train to Busan (2016), Murder on the Orient Express (2017), and Rogue One: A Star Wars Story (2016), as well as The Jungle Book (2016) and The Lion King (2019), before touching on more recent productions like 2020’s The Midnight Sky, 2022’s The Batman and Paramount+ series Star Trek: Strange New Worlds.

READ MORE: The History of Virtual Production (Neil Oseman)

NAB Show New York Introduces New Cine Live Lab

By NAB Amplify

NAB Show New York is introducing the Cine Live Lab, a new destination on the show floor featuring daily, hands-on demonstrations of the latest tools and techniques in cinematic storytelling and live broadcast production. Presented in partnership with AbelCine, the Cine Live Lab is open to all NAB Show New York badge holders and will take place October 19-20 at the Javits Center.

Designed to highlight the synergies between cinematic and broadcast style production, the Cine Live Lab will feature three premier sessions from AbelCine. Presentation topics include managing cinematic multi-cam projects, audience experience goals, identifying production team member roles, as well as equipment and skillsets required of crews.

Additional sessions will teach the camera operation techniques needed to get the best shot, from lensing and focus to setup and framing. Leading companies driving advancements in content creation and cinema will demonstrate their wares. Supporting partners also include Sony, Fujinon, Riedel and MultiDyne.

Sessions include:

- Cinematic Multi-Cam Production: Raising the Bar

- Multi-Cam Production: An Exploration of Key Roles

- Cine Live Fiber Camera Adapter 101

- From Stage to Screen: A Cinematographer’s Perspective

“We are in a transformative time in which cinematic storytelling tools and techniques are being applied to live performance and broadcast productions. The approach that is emerging on these projects is a combination of cinema and broadcast production talent and disciplines. We are pleased to present Cine Live Lab to foster these discussions and bring the creative community together,” said Pete Abel, co-founder and CEO, AbelCine.

“We are excited to offer this dedicated area of the show floor exclusively at NAB Show New York for the cine and broadcasting community to gain exposure and hands-on experience with the latest equipment transforming production and post workflows,” said Chris Brown, executive vice president and managing director of Global Connections and Events at NAB.

The Cine Consortium launched in November 2021 in Los Angeles to help NAB Show and its affiliated events identify opportunities, such as the Cine Live Lab, that serve to educate and unite the cinema, production, post and broader content creation communities. Members include studios, guilds, societies, and technologists.

Want more? In the video below, learn how Pixomondo used Unreal Engine’s virtual production tools alongside a massive AR wall to create the unique environments for Star Trek: Discovery:
